Transformers and Pretrained Language Models Explained

Transformers and pretrained language models have reshaped natural language processing (NLP) and artificial intelligence (AI). They have become the go-to tools for tasks such as machine translation, sentiment analysis, and generating human-like responses. In this blog post, we will look at how transformers work, explain what pretrained language models are, and explore their applications in various domains.

Understanding Transformers

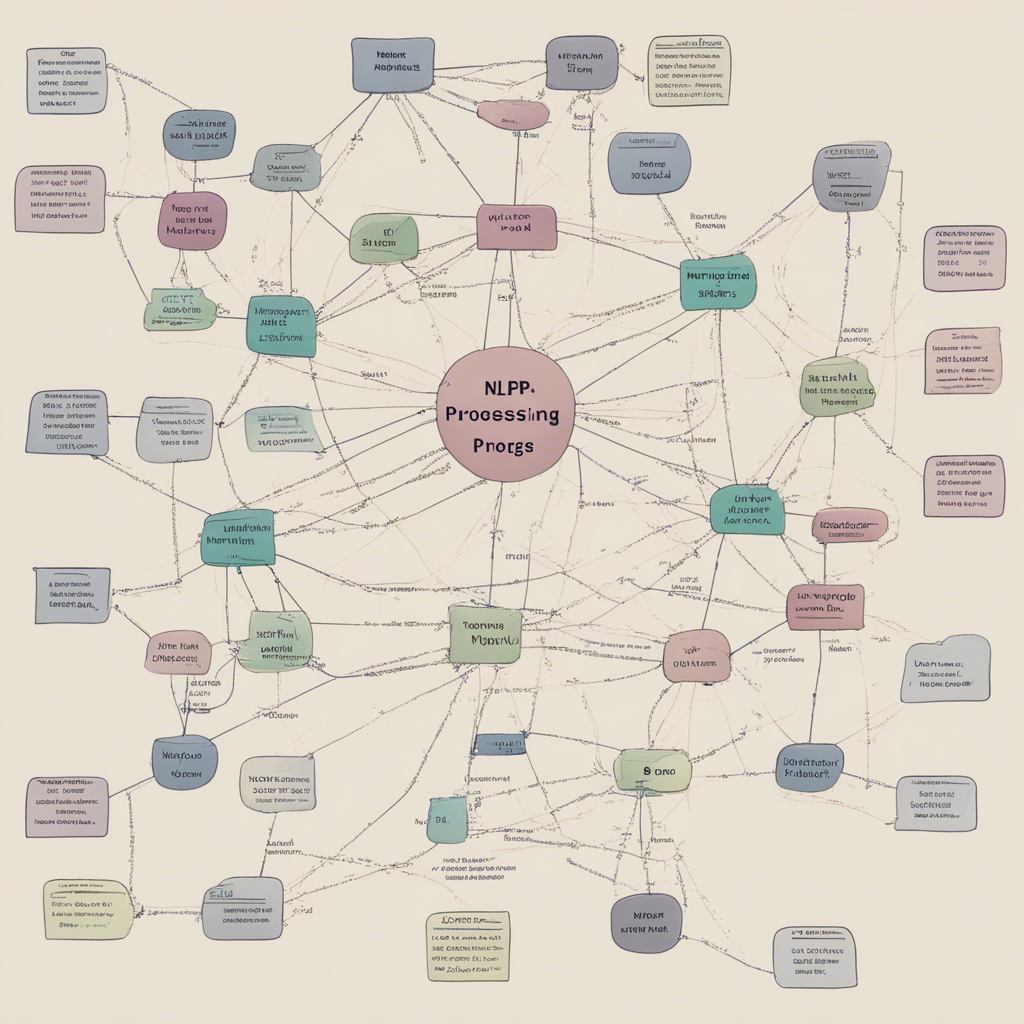

Transformers, introduced by Vaswani et al. in 2017, revolutionized NLP by outperforming traditional recurrent neural networks (RNNs) on many tasks. Unlike RNNs, which read a sequence one word at a time and pass information forward through a hidden state, transformers process all positions of a sequence in parallel. Instead of recurrence, they rely on self-attention to model the relationships between words directly, no matter how far apart the words are.

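The key idea behind transformers is the "attention" mechanism, which lets the model focus on the parts of the input sequence that are most relevant to each word. For every word, attention computes a weight for every word in the sentence, based on how well the word's query vector matches the others' key vectors, and uses these weights to mix the words' value vectors into a new representation. Because every word can attend to every other word, the model captures both local and long-range dependencies, and by stacking these layers into high-dimensional representations, transformers learn meaningful patterns in the data.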
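The attention computation can be written compactly. In the formulation of Vaswani et al., each word's vector is projected into a query, a key, and a value, and attention is softmax(Q Kᵀ / sqrt(d_k)) V, where d_k is the key dimension. Below is a minimal NumPy sketch of that single-head computation; the toy sizes and the random projection matrices are assumptions standing in for learned weights.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the row maximum before exponentiating for numerical stability.
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def scaled_dot_product_attention(q: np.ndarray, k: np.ndarray, v: np.ndarray):
    """q: (n, d_k), k: (m, d_k), v: (m, d_v). Returns (output (n, d_v), weights (n, m))."""
    d_k = q.shape[-1]
    # Score every query against every key; dividing by sqrt(d_k) keeps the
    # dot products from growing large as d_k increases.
    scores = q @ k.T / np.sqrt(d_k)
    # Each row becomes a probability distribution: how much word i attends to word j.
    weights = softmax(scores, axis=-1)
    # Each output row is a weighted mix of the value vectors.
    return weights @ v, weights


rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))  # 4 words, 8-dimensional embeddings (toy values)
# Random matrices stand in for the learned query/key/value projections.
w_q, w_k, w_v = (rng.normal(size=(8, 8)) for _ in range(3))
out, weights = scaled_dot_product_attention(x @ w_q, x @ w_k, x @ w_v)
print(out.shape)             # (4, 8)
print(weights.sum(axis=-1))  # each row sums to 1
```

In a real transformer this runs several times in parallel ("multi-head" attention) with separately learned projections, and the results are concatenated.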

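The diagram above illustrates the architecture of a transformer model, which consists of an encoder and a decoder. The encoder passes the input sequence through a stack of layers, each combining self-attention with a position-wise feed-forward neural network. The decoder generates the output sequence one token at a time: it attends to the tokens produced so far (using masked self-attention, so it cannot look ahead) and, through cross-attention, to the encoder's representation of the input. During training, those previous tokens come from the target sequence, shifted one position to the right.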
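One encoder layer can be sketched in a few lines of NumPy. This follows the layout in Vaswani et al., where each sublayer's output is added back to its input (a residual connection) and then layer-normalized. For brevity the sketch assumes a single attention head, omits the learned scale and shift of layer normalization, uses random weights in place of trained ones, and leaves out the decoder; `random_layer_params` is a hypothetical helper.

```python
import numpy as np


def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def layer_norm(x, eps=1e-5):
    # Normalize each token vector to zero mean and unit variance (learned scale/shift omitted).
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def self_attention(x, p):
    q, k, v = x @ p["w_q"], x @ p["w_k"], x @ p["w_v"]
    weights = softmax(q @ k.T / np.sqrt(k.shape[-1]))
    return weights @ v


def feed_forward(x, p):
    # Position-wise: the same two-layer ReLU network is applied to every token independently.
    return np.maximum(0.0, x @ p["w1"] + p["b1"]) @ p["w2"] + p["b2"]


def encoder_layer(x, p):
    x = layer_norm(x + self_attention(x, p))   # sublayer 1: attention + residual + norm
    return layer_norm(x + feed_forward(x, p))  # sublayer 2: feed-forward + residual + norm


def random_layer_params(rng, d_model, d_ff):
    # Hypothetical helper: small random weights stand in for trained parameters.
    shapes = {"w_q": (d_model, d_model), "w_k": (d_model, d_model), "w_v": (d_model, d_model),
              "w1": (d_model, d_ff), "w2": (d_ff, d_model)}
    p = {name: rng.normal(scale=0.1, size=shape) for name, shape in shapes.items()}
    p["b1"], p["b2"] = np.zeros(d_ff), np.zeros(d_model)
    return p


rng = np.random.default_rng(0)
d_model, d_ff, n_layers = 8, 32, 2
layers = [random_layer_params(rng, d_model, d_ff) for _ in range(n_layers)]
x = rng.normal(size=(5, d_model))  # embeddings for a 5-token input sequence
for p in layers:                   # each layer has its own parameters
    x = encoder_layer(x, p)
print(x.shape)  # (5, 8): one vector per input token
```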

Pretrained Language Models

Pretrained language models are models trained on large amounts of text to learn how words relate to one another. They are typically trained with self-supervised objectives, such as predicting missing or next words, which need no human-written labels, on corpora such as Wikipedia, news articles, and books. Because they represent each word in light of the context in which it appears, they produce contextualized word embeddings: rich representations that capture semantic and syntactic nuances, so that "bank" in "river bank" and in "bank loan" receives different vectors.
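To make "contextualized" concrete, the sketch below starts from the same static vector for the word "bank" in two sentences and passes each sentence through one self-attention layer. Everything here is a toy assumption: the vocabulary, the random embeddings, and the random projection matrices stand in for what a real pretrained model learns from large corpora, so only the fact that the two outputs differ is meaningful, not their values.

```python
import numpy as np

rng = np.random.default_rng(42)
d = 16
vocab = ["the", "river", "bank", "flooded", "approved", "loan"]
# Toy static embedding table: exactly one fixed vector per word, regardless of context.
embed = {w: rng.normal(size=d) for w in vocab}
# Random projections stand in for the weights a pretrained model would learn.
w_q, w_k, w_v = (rng.normal(scale=0.25, size=(d, d)) for _ in range(3))


def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def contextualize(sentence):
    x = np.stack([embed[w] for w in sentence.split()])
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    weights = softmax(q @ k.T / np.sqrt(d))
    return x + weights @ v  # static vector plus information mixed in from the other words


bank_river = contextualize("the river bank flooded")[2]
bank_loan = contextualize("the bank approved the loan")[1]
cos = bank_river @ bank_loan / (np.linalg.norm(bank_river) * np.linalg.norm(bank_loan))
print(f"cosine similarity of the two 'bank' vectors: {cos:.3f}")  # below 1: context changed the vector
```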

One popular pretrained language model is BERT (Bidirectional Encoder Representations from Transformers), introduced by Devlin et al. in 2018. BERT uses a transformer encoder pretrained with a masked language modeling objective: some input words are hidden, and the model learns to predict them from the words on both sides. This lets BERT interpret a word based on both its preceding and succeeding words.
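The masked language modeling objective can be sketched as a data-preparation step. Devlin et al. select 15% of token positions as prediction targets; of those, 80% are replaced with a [MASK] token, 10% with a random token, and 10% are left unchanged. This sketch simplifies in a few ways that are assumptions, not BERT's exact procedure: it selects each position independently with probability `mask_prob` (the original implementation picks a fixed number of positions per sequence), it works on whitespace-split words rather than subword tokens, and `mask_tokens` is a hypothetical name.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=None):
    """Return (inputs, labels); labels[i] is the original token where the model must predict, else None."""
    rnd = random.Random(seed)
    inputs, labels = list(tokens), [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rnd.random() >= mask_prob:
            continue                       # not selected: no prediction target here
        labels[i] = tok                    # the model is trained to recover this token
        r = rnd.random()
        if r < 0.8:
            inputs[i] = MASK               # 80% of selected positions: [MASK]
        elif r < 0.9:
            inputs[i] = rnd.choice(vocab)  # 10%: a random token
        # remaining 10%: leave the original token in place
    return inputs, labels


tokens = "the cat sat on the mat because it was tired".split()
inputs, labels = mask_tokens(tokens, vocab=sorted(set(tokens)), mask_prob=0.3, seed=1)
print(inputs)
print(labels)
```

Keeping some selected tokens unchanged or randomized means the model cannot assume that only [MASK] positions matter, which helps because [MASK] never appears when the model is later fine-tuned.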

Another notable pretrained language model is GPT (Generative Pretrained Transformer), developed by OpenAI. GPT uses a decoder-only transformer architecture trained to predict the next word from the words before it, and is known for its ability to generate coherent and contextually appropriate text.
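What makes a decoder-only model like GPT suitable for generation is that its self-attention is causal: each position may attend only to itself and earlier positions, so the representation used to predict the next word never peeks at later words. The NumPy sketch below, with random weights standing in for trained ones, applies such a mask and checks that changing the last token leaves the outputs at all earlier positions untouched.

```python
import numpy as np


def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def causal_self_attention(x, w_q, w_k, w_v):
    n = x.shape[0]
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    # Lower-triangular mask: position i may attend only to positions 0..i.
    allowed = np.tril(np.ones((n, n), dtype=bool))
    scores = np.where(allowed, scores, -np.inf)  # future positions get zero weight after softmax
    return softmax(scores, axis=-1) @ v


rng = np.random.default_rng(0)
d = 8
w_q, w_k, w_v = (rng.normal(size=(d, d)) for _ in range(3))
x = rng.normal(size=(5, d))  # embeddings for a 5-token prefix
y = x.copy()
y[4] = rng.normal(size=d)    # change only the last token
out_x = causal_self_attention(x, w_q, w_k, w_v)
out_y = causal_self_attention(y, w_q, w_k, w_v)
print(np.allclose(out_x[:4], out_y[:4]))  # True: earlier positions never see the last token
```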

Applications of Transformers and Pretrained Language Models

1. Sentiment Analysis

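Sentiment analysis determines the sentiment or opinion expressed in a piece of text. Transformers and pretrained language models excel at this task because they understand context and the nuances of language, such as the way "not" flips the meaning of "bad" in "not bad". In practice, a pretrained model is usually fine-tuned on labeled examples with a small classification layer added on top. Such models can identify sentiment not only at the word level but also at the sentence and document level.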
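A common way to fine-tune a pretrained encoder for sentiment analysis, used for example with BERT, is to take the output vector at the first position (BERT's [CLS] token), pass it through a linear layer, and train with cross-entropy on labeled examples. The sketch below shows only the forward pass and the loss; the encoder output is a random stand-in, and the two-label setup and weights are assumptions.

```python
import numpy as np

LABELS = ["negative", "positive"]


def classify(hidden_states, w, b):
    """hidden_states: (seq_len, d) encoder output. Returns class probabilities."""
    pooled = hidden_states[0]  # first position, as with BERT's [CLS] token
    logits = pooled @ w + b    # linear classification layer
    e = np.exp(logits - logits.max())
    return e / e.sum()         # softmax over the labels


def cross_entropy(probs, gold_index):
    # Fine-tuning minimizes this loss over labeled examples.
    return -np.log(probs[gold_index])


rng = np.random.default_rng(0)
d = 16
hidden = rng.normal(size=(6, d))  # stand-in for the encoder output on a 6-token review
w = rng.normal(scale=0.1, size=(d, len(LABELS)))
b = np.zeros(len(LABELS))
probs = classify(hidden, w, b)
print(dict(zip(LABELS, probs.round(3))))
print("loss if the review is labeled positive:", cross_entropy(probs, LABELS.index("positive")))
```

During fine-tuning, gradients of this loss update both the new layer and, typically, the pretrained encoder weights.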

2. Machine Translation

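Transformers and pretrained language models have greatly improved machine translation systems. Translation models learn to map between languages by training on large parallel corpora, that is, collections of sentences paired with their translations. Through attention, specifically the decoder's cross-attention over the encoded source sentence, the model learns which source words to focus on when producing each target word. This effectively aligns source and target sentences and enables accurate, fluent translations.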
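The alignment role of attention can be illustrated with cross-attention, where each target-side word queries the encoded source sentence. In the toy example below, the vectors are hand-made (an assumption for illustration) so that each English word points in the same direction as its French counterpart; taking the most-attended source word for each target word recovers the alignment, including the reordering of "chat noir" into "black cat". In a trained model these weights are learned and only softly approximate an alignment.

```python
import numpy as np


def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention_weights(target_queries, source_keys):
    # Rows: target words (queries); columns: source words (keys).
    scores = target_queries @ source_keys.T / np.sqrt(source_keys.shape[-1])
    return softmax(scores, axis=-1)


source = ["le", "chat", "noir"]  # French for "the black cat"
target = ["the", "black", "cat"]
# Hand-made toy vectors: a word and its translation share one axis (an assumption, not learned).
concept = {"le": 0, "the": 0, "chat": 1, "cat": 1, "noir": 2, "black": 2}


def toy_vector(word, scale=4.0):
    return scale * np.eye(3)[concept[word]]


keys = np.stack([toy_vector(w) for w in source])
queries = np.stack([toy_vector(w) for w in target])
weights = cross_attention_weights(queries, keys)
alignment = {t: source[j] for t, j in zip(target, weights.argmax(axis=-1))}
print(alignment)  # {'the': 'le', 'black': 'noir', 'cat': 'chat'}
```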

3. Question Answering

Question answering systems powered by transformers and pretrained language models can understand natural language questions and provide relevant answers. In extractive question answering, the model encodes the question together with a context passage and predicts where the answer starts and ends, so the answer is a span of text taken from the given document.
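The final step of extractive question answering, choosing the answer span, can be sketched directly. Following the approach used with BERT for extractive QA, the model outputs a start score and an end score for every context token, and the answer is the span (start ≤ end) with the highest combined score. The logits below are invented for illustration rather than produced by a real model, and the `max_answer_len` cap is a common practical assumption.

```python
def best_span(start_logits, end_logits, max_answer_len=15):
    """Return (start, end) maximizing start_logits[start] + end_logits[end] with start <= end."""
    best, best_score = (0, 0), float("-inf")
    for s, s_score in enumerate(start_logits):
        # Only consider ends at or after the start, up to the length cap.
        for e in range(s, min(s + max_answer_len, len(end_logits))):
            score = s_score + end_logits[e]
            if score > best_score:
                best, best_score = (s, e), score
    return best


context = "BERT was introduced by Devlin et al. in 2018".split()
# Invented logits, as a fine-tuned model might produce for "When was BERT introduced?"
start_logits = [0.1, -1.0, 0.2, 0.0, 0.5, -0.3, -0.2, 0.3, 3.1]
end_logits = [-0.5, -1.2, 0.0, 0.1, 0.4, 0.2, -0.1, 0.0, 2.8]
s, e = best_span(start_logits, end_logits)
print(" ".join(context[s:e + 1]))  # 2018
```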

4. Text Generation

Pretrained language models like GPT have shown impressive capabilities in generating human-like text. They produce text one token at a time, each time choosing the next token from the model's predicted distribution over its vocabulary. These models can be fine-tuned for specific tasks such as writing poetry, composing music, or even generating code. However, care is needed to ensure ethical use and to prevent the generation of inappropriate or misleading content.
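A single generation step can be sketched as sampling from the model's scores (logits) over the vocabulary. Two widely used controls are temperature, which sharpens (below 1) or flattens (above 1) the distribution, and top-k filtering, which keeps only the k most likely tokens. The vocabulary and logits here are made up, and `sample_next_token` is a hypothetical helper rather than any library's API.

```python
import numpy as np


def sample_next_token(logits, temperature=1.0, top_k=None, rng=None):
    """Sample a token index from logits after temperature scaling and optional top-k filtering."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    if rng is None:
        rng = np.random.default_rng()
    scaled = np.asarray(logits, dtype=float) / temperature
    if top_k is not None:
        # Keep the k highest-scoring tokens (ties at the cutoff are kept); drop the rest.
        cutoff = np.sort(scaled)[-top_k]
        scaled = np.where(scaled >= cutoff, scaled, -np.inf)
    probs = np.exp(scaled - scaled.max())
    probs /= probs.sum()
    return int(rng.choice(len(probs), p=probs))


vocab = ["the", "cat", "sat", "on", "mat"]
logits = [2.0, 1.0, 0.5, 0.1, -1.0]  # made-up scores for the next token
rng = np.random.default_rng(0)
print(vocab[sample_next_token(logits, top_k=1, rng=rng)])  # the (top_k=1 is greedy decoding)
print([vocab[sample_next_token(logits, temperature=0.7, top_k=3, rng=rng)] for _ in range(5)])
```

In a full generator, the sampled token is appended to the input and the model is run again to score the following token.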

Conclusion

Transformers and pretrained language models have emerged as powerful tools in NLP and AI. Their ability to capture semantic relationships and understand context has driven the development of advanced language processing systems. Whether in sentiment analysis, machine translation, question answering, or text generation, these models have transformed the way we process and interact with natural language. As research in this area continues, we can expect even more applications and breakthroughs.

References:

- Vaswani, A., et al. “Attention Is All You Need.” arXiv preprint arXiv:1706.03762 (2017).

- Devlin, J., et al. “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding.” arXiv preprint arXiv:1810.04805 (2018).