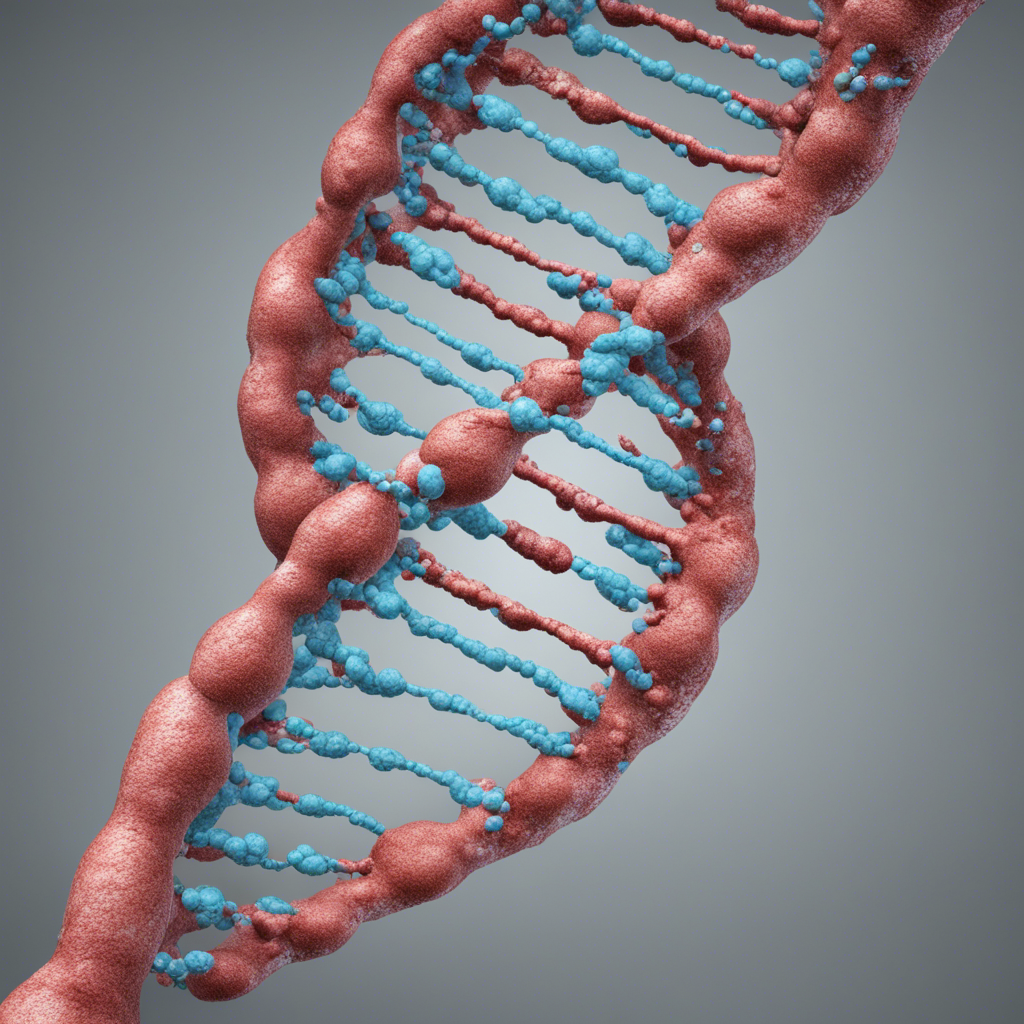

Genetic Algorithms: Natural Selection in ML Optimization

Introduction

Optimization is central to machine learning: better algorithm performance usually comes from searching for a configuration that scores well on some objective. One popular technique for this kind of search is Genetic Algorithms (GAs). Inspired by natural selection, GAs can search large solution spaces for near-optimal solutions using nothing more than a way to score candidates. In this blog post, we will look at the inner workings of GAs, discuss their components, and explore how natural selection drives optimization in machine learning.

Understanding Genetic Algorithms

Genetic Algorithms draw inspiration from Darwinian evolutionary principles, applying selection, crossover, and mutation to candidate solutions in a way that loosely emulates natural selection. A GA starts with an initial population of potential solutions, usually generated at random, and improves it iteratively over multiple generations.

The core components of a Genetic Algorithm are:

1. Population

The population is a collection of individuals, each representing a potential solution to the problem at hand. Population size strongly affects both the effectiveness and the efficiency of the algorithm. A larger population explores the solution space more thoroughly, increasing the chances of finding near-optimal solutions, but it also raises the computational cost because every individual must be evaluated in every generation.
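As a minimal sketch of what a population can look like, the snippet below assumes each individual is encoded as a fixed-length list of bits. The function name `init_population` and the sizes used are illustrative choices, not part of any particular library.

```python
import random

def init_population(pop_size, genome_length, rng):
    """Create pop_size random individuals, each a list of 0/1 genes."""
    return [[rng.randint(0, 1) for _ in range(genome_length)]
            for _ in range(pop_size)]

rng = random.Random(0)  # fixed seed so runs are repeatable
population = init_population(pop_size=20, genome_length=8, rng=rng)
print(len(population), "individuals of length", len(population[0]))
```

Doubling `pop_size` doubles the number of fitness evaluations per generation, which is the cost trade-off described above.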

2. Fitness Function

A fitness function evaluates the quality of an individual solution based on predefined criteria. The fitness function assigns a fitness score to each individual, determining how well it performs compared to others in the population. The idea is to guide the search towards better solutions by favoring individuals with higher fitness scores during the selection process.
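To make this concrete, here is a small sketch using the classic OneMax toy problem, where the fitness of a bit-string is simply the number of 1s it contains. The problem choice and the name `onemax_fitness` are assumptions made for illustration; a real application would score individuals against its own objective.

```python
def onemax_fitness(individual):
    """Toy fitness: count the 1-bits, so the all-ones string is optimal."""
    return sum(individual)

population = [[0, 1, 1, 0], [1, 1, 1, 1], [0, 0, 0, 1]]
scores = [onemax_fitness(ind) for ind in population]
best = max(population, key=onemax_fitness)

print(scores)  # [2, 4, 1]
print(best)    # [1, 1, 1, 1]
```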

3. Selection

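Selection is the process of choosing individuals from the population to act as parents of the next generation. Individuals with higher fitness scores have a higher probability of being selected, mimicking the survival of the fittest in nature. Common techniques include roulette wheel selection, which picks each individual with probability proportional to its fitness, and tournament selection, which draws a small random group and keeps its fittest member. How strongly selection favors the fittest (the selection pressure) sets the balance between exploring the solution space and exploiting the best solutions found so far.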
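The two techniques named above can be sketched in a few lines. This is one possible formulation, not the only one: the function names are hypothetical, and the roulette version assumes all scores are non-negative with at least one positive.

```python
import random

def tournament_select(population, scores, k, rng):
    """Draw k distinct individuals at random and return the fittest of them."""
    contenders = rng.sample(range(len(population)), k)
    winner = max(contenders, key=lambda i: scores[i])
    return population[winner]

def roulette_select(population, scores, rng):
    """Pick one individual with probability proportional to its score.

    Assumes scores are non-negative and not all zero.
    """
    return rng.choices(population, weights=scores, k=1)[0]

rng = random.Random(1)
population = ["A", "B", "C", "D"]   # stand-ins for encoded solutions
scores = [1.0, 5.0, 2.0, 0.5]

parent_1 = tournament_select(population, scores, k=2, rng=rng)
parent_2 = roulette_select(population, scores, rng=rng)
print(parent_1, parent_2)
```

A larger tournament size `k` raises the selection pressure: with `k` equal to the population size, the fittest individual always wins, while `k=1` reduces to uniform random choice.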

4. Crossover

Crossover is a genetic operator that combines genetic material from two parent individuals to create new offspring. By exchanging segments of their genetic information, the offspring inherits characteristics from both parents. This allows for the exploration of new areas in the solution space. The choice of crossover technique, such as one-point crossover or uniform crossover, can have a significant impact on the algorithm’s performance.
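The sketch below shows both operators on list-encoded individuals. It assumes the two parents have equal length (at least 2 for the one-point version); the function names are illustrative.

```python
import random

def one_point_crossover(parent_a, parent_b, rng):
    """Cut both parents at the same random point and swap the tails."""
    cut = rng.randint(1, len(parent_a) - 1)  # never cut at the very ends
    child_a = parent_a[:cut] + parent_b[cut:]
    child_b = parent_b[:cut] + parent_a[cut:]
    return child_a, child_b

def uniform_crossover(parent_a, parent_b, rng):
    """For each position, swap the parents' genes with probability 0.5."""
    child_a, child_b = [], []
    for gene_a, gene_b in zip(parent_a, parent_b):
        if rng.random() < 0.5:
            gene_a, gene_b = gene_b, gene_a
        child_a.append(gene_a)
        child_b.append(gene_b)
    return child_a, child_b

rng = random.Random(2)
mother = [0] * 8
father = [1] * 8
print(one_point_crossover(mother, father, rng))
print(uniform_crossover(mother, father, rng))
```

One-point crossover keeps neighboring genes together, which suits encodings where adjacent positions are related; uniform crossover mixes more aggressively and ignores position.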

5. Mutation

Mutation introduces small random changes to the genetic material of an individual. It injects diversity into the population, which helps prevent premature convergence to suboptimal solutions. Mutation is typically applied with a low probability during reproduction, so that the algorithm can reach unexplored regions of the solution space without erasing the progress made by selection and crossover.
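For bit-string individuals, a common form is bit-flip mutation, sketched below. The per-gene `rate` of 0.05 is an arbitrary illustrative value, and `bit_flip_mutation` is a hypothetical name.

```python
import random

def bit_flip_mutation(individual, rate, rng):
    """Flip each bit independently with probability `rate`; returns a new list."""
    return [1 - gene if rng.random() < rate else gene for gene in individual]

rng = random.Random(3)
original = [0, 1, 0, 1, 1, 0, 0, 1]
mutant = bit_flip_mutation(original, rate=0.05, rng=rng)
print(original)
print(mutant)
```

With a rate this low, most offspring pass through unchanged; setting the rate too high turns the search into something close to random sampling.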

6. Elitism

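Elitism ensures that the best individuals from each generation are preserved without any changes. By copying the elite individuals directly to the next generation, the algorithm prevents high-quality solutions from being lost to crossover or mutation. Elitism does not add diversity by itself, so the number of elites is usually kept small to stop a few individuals from dominating the population.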
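Putting the six components together, one generation-by-generation loop might look like the sketch below. It is a minimal illustration under stated assumptions, not a reference implementation: individuals are bit-strings, parents are chosen by tournament selection, offspring come from one-point crossover plus bit-flip mutation, and every parameter value (population size, rates, `elite_count`) is an arbitrary default chosen for the example.

```python
import random

def evolve(fitness, genome_length=20, pop_size=30, generations=40,
           elite_count=2, tournament_k=3, mutation_rate=0.02, seed=0):
    """Run a simple GA and return (best individual, best score per generation)."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(genome_length)]
                  for _ in range(pop_size)]
    best_per_generation = []

    def pick_parent(candidates):
        # Tournament selection: fittest of a small random group.
        return max(rng.sample(candidates, tournament_k), key=fitness)

    for _ in range(generations):
        ranked = sorted(population, key=fitness, reverse=True)
        best_per_generation.append(fitness(ranked[0]))

        # Elitism: copy the top individuals into the next generation unchanged.
        next_population = [ind[:] for ind in ranked[:elite_count]]

        # Fill the remaining slots with mutated offspring.
        while len(next_population) < pop_size:
            parent_a, parent_b = pick_parent(ranked), pick_parent(ranked)
            cut = rng.randint(1, genome_length - 1)      # one-point crossover
            child = parent_a[:cut] + parent_b[cut:]
            child = [1 - g if rng.random() < mutation_rate else g
                     for g in child]                     # bit-flip mutation
            next_population.append(child)
        population = next_population

    return max(population, key=fitness), best_per_generation

# Toy objective: maximize the number of 1-bits (OneMax).
best, history = evolve(fitness=sum)
print("best score:", sum(best))
print("first and last generation best:", history[0], history[-1])
```

Because the elites are copied unchanged and the fitness function is deterministic, the best score recorded in `history` can never decrease from one generation to the next.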

Natural Selection in ML Optimization

The application of natural selection principles in genetic algorithms provides a powerful method for tackling optimization problems in machine learning. Here are a few reasons why GAs are well-suited for ML optimization:

1. Global Search

Genetic Algorithms search broadly across a solution space, which makes them less prone to getting stuck in local optima than methods that refine a single candidate, although they do not guarantee finding the global optimum. Because a population samples several regions of the solution space at once, and crossover and mutation keep introducing variation, GAs improve the chances of uncovering a high-quality solution.

2. Handling Nonlinearity

Many real-world optimization problems exhibit nonlinear relationships between variables, making them hard to tackle with traditional methods that rely on gradients or on assumptions such as linearity or convexity. Genetic Algorithms only need to evaluate the fitness of candidate solutions, so they can be applied to nonlinear, discontinuous, or non-differentiable objectives, exploring the solution space in a population-based manner.

3. Robustness

GAs tend to tolerate noisy or incomplete data reasonably well. The fitness function scores each candidate on whatever data is available, and because selection acts on a whole population rather than a single candidate, an occasional misleading score is unlikely to derail the search. This allows for effective optimization even in the presence of uncertainty.

4. Versatility

Genetic Algorithms can be customized and adapted to a wide range of problem domains. By appropriately encoding the solutions and defining the fitness function, GAs can be applied to optimization problems across various fields, including engineering, finance, bioinformatics, and more.
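As a sketch of what "encoding the solutions" can mean, the snippet below decodes a bit-string into a real number within a chosen range, so the same bit-level operators can search over a continuous parameter. The range `[0.0, 4.0]` and the quadratic objective with its peak at 2.0 are made-up values for illustration only.

```python
def decode_real(bits, low, high):
    """Map a list of bits to a real number in the closed interval [low, high]."""
    as_int = int("".join(str(b) for b in bits), 2)
    max_int = 2 ** len(bits) - 1
    return low + (high - low) * as_int / max_int

def fitness(bits):
    """Hypothetical objective: highest when the decoded value equals 2.0."""
    x = decode_real(bits, low=0.0, high=4.0)
    return -(x - 2.0) ** 2

print(decode_real([0, 0, 0, 0], 0.0, 4.0))  # 0.0
print(decode_real([1, 1, 1, 1], 0.0, 4.0))  # 4.0
print(fitness([0, 0, 0, 0]))                # -4.0
```

Using more bits per parameter gives finer resolution at the cost of a larger search space; switching problem domains then mostly means swapping the decoder and the fitness function.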

Conclusion

Genetic Algorithms provide a powerful optimization technique inspired by natural selection. They demonstrate the ability to effectively explore solution spaces and find near-optimal solutions, making them a valuable tool in machine learning optimization. By incorporating concepts like population, fitness function, selection, crossover, mutation, and elitism, GAs optimize solutions through multiple generations, leveraging the principles of evolutionary biology.

Whether you’re tackling complex machine learning problems or seeking optimizations in various domains, Genetic Algorithms offer a promising approach worth exploring. By harnessing the forces of natural selection, GAs provide a computational method that can lead us closer to optimal solutions and improved performance.
