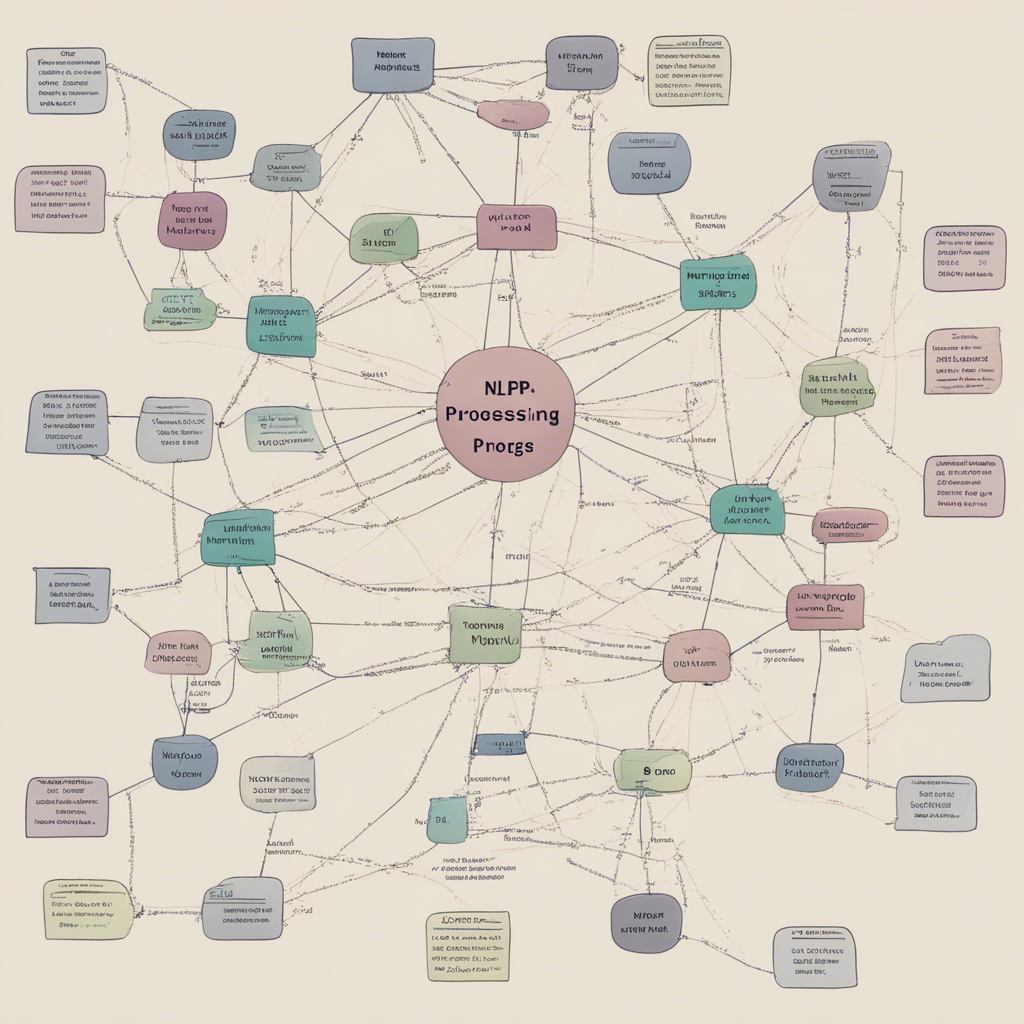

Introduction to Natural Language Processing Concepts

Natural Language Processing (NLP) is a branch of artificial intelligence that focuses on the interaction between humans and computers through natural language. It encompasses various techniques and algorithms that enable computers to understand, interpret, and generate human language in a way that is both meaningful and useful.

In this blog post, we will provide a comprehensive introduction to the key concepts and techniques used in NLP. We will explore various NLP tasks and applications, discuss the challenges involved, and present notable examples and advancements in the field. So, grab a cup of coffee and let’s dive into the fascinating world of NLP!

What is Natural Language Processing (NLP)?

Natural Language Processing (NLP) involves the interaction between computers and human language. It aims to provide computers with the ability to understand and process human language in a way that is similar to how humans do. NLP combines techniques from computational linguistics, artificial intelligence, and machine learning to analyze, interpret, and generate text.

Key Concepts in Natural Language Processing

Tokenization

Tokenization is the process of breaking a text into smaller units called tokens. These tokens can be sentences, individual words, or even subword units. Tokenization is the first step in most NLP tasks because it turns raw text into a structured sequence that later stages can process.
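To make this concrete, here is a minimal rule-based tokenizer sketch using only regular expressions. The function names `word_tokenize` and `sentence_split` and the token pattern are our own assumptions for illustration; production tokenizers handle many more cases (abbreviations such as "Dr.", URLs, emoji, languages without spaces).

```python
import re

# A word is a run of word characters, optionally joined by apostrophes or hyphens
# (e.g. "isn't", "state-of-the-art"); any other non-space character is its own token.
TOKEN_RE = re.compile(r"\w+(?:['-]\w+)*|[^\w\s]")


def word_tokenize(text: str) -> list[str]:
    """Split text into word and punctuation tokens."""
    return TOKEN_RE.findall(text)


def sentence_split(text: str) -> list[str]:
    """Naively split after '.', '!' or '?' when followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]
```

For example, `word_tokenize("NLP isn't magic.")` yields `["NLP", "isn't", "magic", "."]`, while the naive sentence splitter would wrongly break "Dr. Smith" in two, which is why real systems use trained or rule-rich segmenters.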

Part-of-Speech (POS) Tagging

Part-of-speech tagging assigns a grammatical category (such as noun, verb, or adjective) to each word in a sentence. This exposes the syntactic structure of the text and is used in tasks like text classification, named entity recognition, and question-answering systems.
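A common starting point is the "most frequent tag" baseline: tag each word with the tag it received most often in training data. The sketch below assumes a tiny hand-made training corpus (`TRAINING`) with Universal Dependencies-style tag names and a default of `NOUN` for unknown words; both are illustrative choices, not part of any particular library.

```python
from collections import Counter, defaultdict

# Tiny hypothetical training corpus of (word, tag) pairs.
TRAINING = [
    [("the", "DET"), ("dog", "NOUN"), ("barks", "VERB")],
    [("the", "DET"), ("old", "ADJ"), ("man", "NOUN"), ("runs", "VERB")],
    [("dogs", "NOUN"), ("bark", "VERB"), ("loudly", "ADV")],
]


def train_most_frequent_tag(tagged_sentences):
    """Map each (lowercased) word to the tag it was seen with most often."""
    counts = defaultdict(Counter)
    for sentence in tagged_sentences:
        for word, tag in sentence:
            counts[word.lower()][tag] += 1
    return {word: tags.most_common(1)[0][0] for word, tags in counts.items()}


def tag(tokens, lexicon, default="NOUN"):
    """Tag each token from the lexicon; unseen words get `default`."""
    return [(tok, lexicon.get(tok.lower(), default)) for tok in tokens]
```

This baseline ignores context entirely, so a word like "run" always gets the same tag whether it is used as a noun or a verb; statistical sequence models (HMMs, CRFs, neural taggers) exist precisely to use the surrounding words.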

Named Entity Recognition (NER)

Named Entity Recognition aims to identify named entities in text and classify them into predefined categories such as person names, organization names, locations, and dates. This information supports NLP applications like information retrieval, entity linking, and sentiment analysis.
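The simplest form of NER is a gazetteer lookup: match token spans against a list of known names and emit BIO tags (B = beginning of an entity, I = inside, O = outside). The sketch below assumes a small hypothetical `GAZETTEER` and uses greedy longest-match so that "New York Times" wins over "New York". Real systems rely on trained sequence models, since no list can cover every new name or resolve ambiguous ones.

```python
# Hypothetical gazetteer: lowercased token tuples mapped to entity labels.
GAZETTEER = {
    ("barack", "obama"): "PER",
    ("new", "york"): "LOC",
    ("new", "york", "times"): "ORG",
    ("paris",): "LOC",
}
MAX_LEN = max(len(key) for key in GAZETTEER)


def gazetteer_ner(tokens):
    """Greedy longest-match gazetteer lookup; returns one BIO tag per token."""
    lowered = [t.lower() for t in tokens]
    tags = ["O"] * len(tokens)
    i = 0
    while i < len(tokens):
        # Try the longest candidate span first.
        for n in range(min(MAX_LEN, len(tokens) - i), 0, -1):
            label = GAZETTEER.get(tuple(lowered[i:i + n]))
            if label:
                tags[i] = f"B-{label}"
                for j in range(i + 1, i + n):
                    tags[j] = f"I-{label}"
                i += n
                break
        else:
            # No entity starts here; move to the next token.
            i += 1
    return tags
```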

Sentiment Analysis

Sentiment analysis, also known as opinion mining, involves determining the sentiment or emotion expressed in a piece of text. It is used to analyze customer feedback, social media posts, and online reviews to gauge public opinion towards products, services, or events. Techniques such as lexicon-based analysis, machine learning, and deep learning are commonly employed for sentiment analysis.
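A lexicon-based approach can be sketched in a few lines: sum the polarity scores of known words and flip the sign of a word that directly follows a negator. The `LEXICON` and `NEGATORS` below are tiny hypothetical examples; published sentiment lexicons contain thousands of scored entries and more careful negation and intensifier rules.

```python
import re

# Hypothetical polarity lexicon and negation words.
LEXICON = {"good": 1, "great": 2, "love": 2, "bad": -1, "terrible": -2, "boring": -1}
NEGATORS = {"not", "never", "no", "isn't", "don't"}


def sentiment_score(text: str) -> int:
    """Sum word polarities, flipping a word's sign if the previous word is a negator."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, tok in enumerate(tokens):
        polarity = LEXICON.get(tok, 0)
        if i > 0 and tokens[i - 1] in NEGATORS:
            polarity = -polarity
        score += polarity
    return score


def sentiment_label(text: str) -> str:
    """Map the score to a coarse label."""
    s = sentiment_score(text)
    return "positive" if s > 0 else "negative" if s < 0 else "neutral"
```

For instance, "not bad at all" scores +1 because the negator flips "bad". Machine learning approaches instead learn such weights and interactions from labeled examples.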

Machine Translation

Machine translation is the task of automatically translating text from one language to another. It is one of the oldest and most extensively researched areas in NLP. Early systems were rule-based, statistical phrase-based systems later became the dominant approach, and neural machine translation has since brought significant improvements in translation accuracy and fluency.

Question Answering

Question answering involves designing systems that can answer questions posed in natural language. This requires understanding both the question and the relevant context (for example, a document or passage) in order to find or produce the correct answer. Question answering systems can be rule-based or machine learning-based, and pre-trained language models like BERT and GPT have driven much of the recent progress.
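Before neural models, a common baseline for reading-comprehension QA was answer-sentence selection by word overlap: pick the context sentence that shares the most content words with the question. The sketch below assumes a hand-picked `STOPWORDS` set and naive sentence splitting; it returns a whole sentence rather than an exact answer span, which is what models like BERT are typically fine-tuned to extract.

```python
import re

# Hypothetical stopword list; question words are removed so they don't count as overlap.
STOPWORDS = {"the", "a", "an", "is", "was", "of", "in", "what", "who",
             "when", "where", "did", "does", "to"}


def content_words(text):
    """Lowercased word set without stopwords."""
    return {w for w in re.findall(r"\w+", text.lower()) if w not in STOPWORDS}


def best_answer_sentence(question, context):
    """Return the context sentence with the largest content-word overlap."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", context.strip()) if s]
    q_words = content_words(question)
    return max(sentences, key=lambda s: len(q_words & content_words(s)))
```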

Text Summarization

Text summarization aims to automatically generate concise summaries of longer pieces of text. This is especially useful for tasks like news article summarization, document indexing, and information retrieval. Approaches to text summarization include extractive methods that select important sentences from the original text and abstractive methods that generate new sentences based on the context.
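An extractive method can be sketched with a classic word-frequency heuristic: score each sentence by how frequent its (non-stopword) words are across the whole document, keep the top-scoring sentences, and output them in their original order. The stopword list and the averaging choice are assumptions made for this illustration; abstractive summarization, by contrast, needs a generative model.

```python
import re
from collections import Counter

# Hypothetical stopword list.
STOPWORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "is", "are",
             "it", "that", "for", "on", "with"}


def summarize(text: str, num_sentences: int = 2) -> str:
    """Extractive summary made of the sentences with the most frequent words."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    words = [w for w in re.findall(r"\w+", text.lower()) if w not in STOPWORDS]
    freq = Counter(words)

    def score(sentence: str) -> float:
        tokens = [w for w in re.findall(r"\w+", sentence.lower()) if w not in STOPWORDS]
        # Average rather than sum, so long sentences are not favoured for length alone.
        return sum(freq[w] for w in tokens) / len(tokens) if tokens else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:num_sentences])  # restore original document order
    return " ".join(sentences[i] for i in keep)
```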

Language Modeling

Language modeling is the task of assigning probabilities to sequences of words, which, by the chain rule of probability, amounts to predicting each word from the words that precede it. It is widely used in applications like speech recognition, machine translation, and text generation. Neural language models built on recurrent neural networks (RNNs) and, more recently, transformers have achieved remarkable success across many NLP tasks by learning the statistical regularities of language from large text corpora.
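The chain rule writes the probability of a sentence as P(w1, ..., wn) = P(w1) · P(w2 | w1) · ... · P(wn | w1, ..., wn-1). A bigram model approximates each factor by conditioning only on the previous word, estimated by counting: P(wi | wi-1) = count(wi-1 wi) / count(wi-1). The sketch below implements that maximum-likelihood estimate with `<s>` and `</s>` sentence-boundary markers and whitespace tokenization; it deliberately omits smoothing, so any unseen bigram gets probability zero.

```python
from collections import Counter


def train_bigram(sentences):
    """Count bigrams and their left contexts, with <s>/</s> boundary markers."""
    contexts, bigrams = Counter(), Counter()
    for sent in sentences:
        tokens = ["<s>"] + sent.lower().split() + ["</s>"]
        contexts.update(tokens[:-1])  # every token that has a successor
        bigrams.update(zip(tokens[:-1], tokens[1:]))
    return contexts, bigrams


def sentence_prob(sentence, model):
    """P(sentence) as a product of MLE bigram probabilities (no smoothing)."""
    contexts, bigrams = model
    tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
    prob = 1.0
    for prev, word in zip(tokens[:-1], tokens[1:]):
        if contexts[prev] == 0:
            return 0.0
        prob *= bigrams[(prev, word)] / contexts[prev]
    return prob
```

Trained on "the cat sat", "the dog sat", and "the cat ran", the model gives "the cat sat" a probability of 1 · 2/3 · 1/2 · 1 = 1/3 and "the dog ran" a probability of 0. Smoothing methods such as add-one or Kneser-Ney smoothing, and ultimately neural models, address exactly this sparsity problem.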

Challenges in Natural Language Processing

While NLP has witnessed significant advancements in recent years, there are still several challenges that researchers and practitioners are actively working on. Some of the key challenges in NLP include:

- Ambiguity: Natural language is inherently ambiguous, with words and phrases often having multiple meanings depending on context. Resolving this ambiguity is crucial for accurate interpretation and understanding.
- Out-of-vocabulary words: Language evolves constantly, and new words and phrases appear all the time. Handling words that are missing from a system's vocabulary or from traditional lexical resources remains a challenge.
- Lack of context: Understanding text requires considering the broader context in which it appears. However, context is often implicit, and capturing it accurately remains a challenge.
- Domain-specific challenges: Language and terminology vary across domains, making it difficult to build general-purpose NLP systems. Adapting existing models or building domain-specific models often involves additional data collection and fine-tuning.
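Word sense ambiguity, the first challenge above, has a classic knowledge-based approach: the simplified Lesk algorithm, which picks the sense whose dictionary gloss shares the most words with the surrounding sentence. The two-sense inventory for "bank" and the stopword list below are hypothetical stand-ins for a real lexical resource such as WordNet.

```python
import re

# Hypothetical sense inventory: sense id -> short gloss.
SENSES = {
    "bank": {
        "bank/finance": "an institution that accepts deposits of money and makes loans",
        "bank/river": "the sloping land alongside a river or stream of water",
    }
}
STOPWORDS = {"a", "an", "the", "of", "and", "or", "that", "to", "on", "at", "we", "i", "my", "in"}


def bag(text):
    """Lowercased content-word set."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def simplified_lesk(word, sentence):
    """Return the sense whose gloss overlaps most with the sentence's words."""
    context = bag(sentence) - {word}
    senses = SENSES[word]
    return max(senses, key=lambda s: len(bag(senses[s]) & context))
```

Exact word overlap is brittle ("deposited" does not match "deposits"), which is one reason modern systems resolve ambiguity with contextual embeddings instead.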

Current Trends and Advancements in NLP

NLP is a rapidly evolving field, with several exciting advancements transforming the way we interact with language. Some of the recent trends and notable advancements in NLP include:

- Transfer Learning: Transfer learning has reshaped NLP by letting models learn general-purpose language representations from large amounts of unlabeled text and then reuse them. Pre-trained models like BERT, GPT, and T5 have achieved state-of-the-art performance on many NLP tasks by combining large-scale pretraining with fine-tuning on specific downstream tasks.
- Multimodal NLP: Multimodal NLP combines text with other modalities like images, video, and audio, for example to answer questions about an image or to describe a video in words. This area has gained prominence due to the increasing availability of multimodal data and the potential for building more robust systems.
- Ethical and Fair NLP: As NLP systems become more widespread, fairness and other ethical concerns are receiving more attention. Researchers are actively working on detecting and mitigating biases in data, algorithms, and applications to make NLP systems more inclusive and less biased.

Conclusion

Natural Language Processing (NLP) plays a crucial role in enabling computers to understand, interpret, and generate human language. It encompasses various techniques and tasks that have numerous applications in areas like sentiment analysis, machine translation, question answering, and text summarization.

While NLP has made significant advancements, challenges such as ambiguity, out-of-vocabulary words, and lack of context still persist. However, ongoing research, along with the development of powerful pre-trained models and novel approaches, continues to push the boundaries of NLP.

As research moves forward, NLP has the potential to make communication between humans and computers more natural and to transform many industries and aspects of everyday life.
