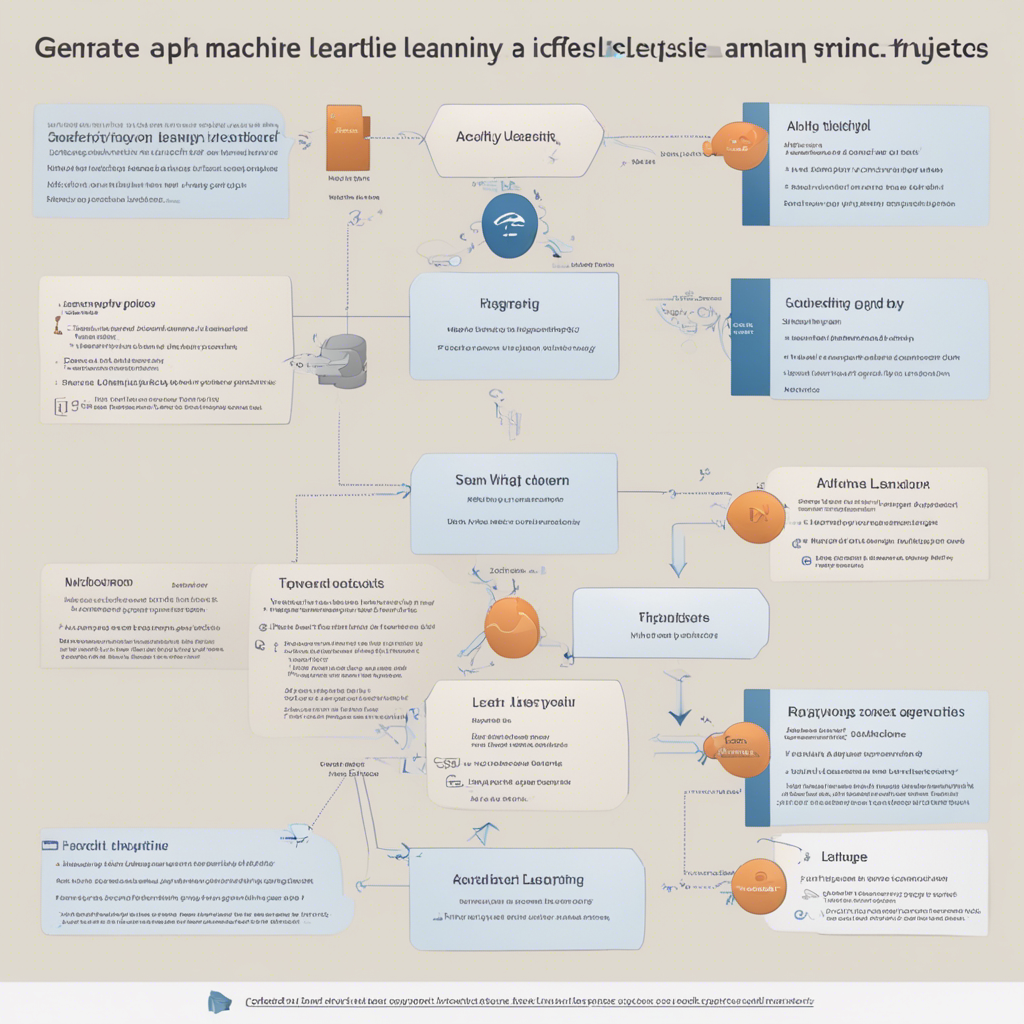

From Data to Deployment: An End-to-End ML Workflow

Machine learning (ML) lets computers learn from data and make predictions or decisions without being explicitly programmed for each case. Its applications range from finding patterns in large datasets to powering recommendation systems, and it has changed how many complex problems are approached.

This post walks through a typical ML project, from data collection and preprocessing to model training, evaluation, and deployment. For each step, it covers best practices and tools that help keep the end-to-end workflow smooth.

1. Data Collection and Preprocessing

The first step in any ML project is collecting relevant data. Data can come from various sources, such as databases, APIs, or scraping the web. It is essential to gather a diverse and representative dataset that adequately captures the problem you are trying to solve.

Once the data has been collected, preprocessing prepares it for analysis and modeling. This step involves cleaning the data, handling missing values, dealing with outliers (removing them only when they are errors rather than genuine extreme cases), and transforming variables as necessary. The goal is a dataset in a consistent format that is ready for model training.
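As a minimal sketch of these preprocessing steps, the snippet below uses pandas on a small made-up table. The column names, the median fill, and the 1.5 × IQR outlier rule are illustrative assumptions, not the only reasonable choices.

```python
import numpy as np
import pandas as pd

# Hypothetical raw data: one missing age, one missing income, one extreme income.
raw = pd.DataFrame({
    "age": [25, 32, np.nan, 41, 29, 38],
    "income": [49_000, 52_000, 50_000, np.nan, 1_000_000, 51_000],
    "signup_date": ["2023-01-05", "2023-02-11", "2023-02-20",
                    "2023-03-02", "2023-03-15", "2023-04-01"],
})


def preprocess(df: pd.DataFrame) -> pd.DataFrame:
    out = df.copy()
    # Handle missing values: fill numeric gaps with the column median.
    for col in ["age", "income"]:
        out[col] = out[col].fillna(out[col].median())
    # Handle outliers: keep rows whose income lies within 1.5 * IQR of the quartiles.
    q1 = out["income"].quantile(0.25)
    q3 = out["income"].quantile(0.75)
    iqr = q3 - q1
    in_range = out["income"].between(q1 - 1.5 * iqr, q3 + 1.5 * iqr)
    out = out[in_range].reset_index(drop=True)
    # Fix the format: parse date strings into a datetime column.
    out["signup_date"] = pd.to_datetime(out["signup_date"])
    return out


clean = preprocess(raw)
print(clean)
```

Whether to drop, cap, or keep an outlier depends on the problem; here the extreme income row is dropped purely for illustration.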

2. Exploratory Data Analysis (EDA)

Exploratory Data Analysis (EDA) is about getting to know the dataset before modeling it: how each variable is distributed, how variables relate to one another, and where the data looks suspicious. The findings feed directly into hypotheses about which features and models are worth trying.

Visualization plays a key role in EDA, allowing us to present and interpret data effectively. Creating visualizations such as histograms, scatter plots, and bar charts helps in uncovering trends, correlations, and outliers. Tools like matplotlib and seaborn in Python provide excellent capabilities for data visualization.
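A short sketch of this kind of exploration, using pandas for summary statistics and matplotlib for a histogram and a scatter plot. The dataset is synthetic, and the column names and output file name are assumptions made for the example.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend, so the script runs without a display
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd

# Synthetic data: exam scores that rise with hours studied, plus noise.
rng = np.random.default_rng(0)
hours = rng.uniform(0, 10, size=200)
score = 50 + 4 * hours + rng.normal(0, 5, size=200)
df = pd.DataFrame({"hours_studied": hours, "exam_score": score})

# Numeric overview: count, mean, spread, and quartiles per column.
summary = df.describe()
# Pearson correlation between the two variables.
corr = df["hours_studied"].corr(df["exam_score"])
print(summary)
print(f"correlation: {corr:.2f}")

# Histogram for one variable's distribution, scatter plot for the relationship.
fig, (ax_hist, ax_scatter) = plt.subplots(1, 2, figsize=(10, 4))
ax_hist.hist(df["exam_score"], bins=20)
ax_hist.set_xlabel("exam_score")
ax_hist.set_ylabel("count")
ax_scatter.scatter(df["hours_studied"], df["exam_score"], s=10)
ax_scatter.set_xlabel("hours_studied")
ax_scatter.set_ylabel("exam_score")
fig.tight_layout()
fig.savefig("eda_overview.png")
```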

3. Feature Engineering

Feature engineering is the process of transforming raw data into meaningful features that can be used for model training. It involves selecting relevant features, encoding categorical variables, scaling numeric variables, and creating new features derived from the existing ones.

Feature engineering is a crucial step that can significantly impact the performance of an ML model. It requires domain knowledge, creativity, and experimentation to extract the most informative features from the data.
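The sketch below illustrates three of the operations mentioned above with scikit-learn: creating a derived feature, scaling numeric columns, and one-hot encoding a categorical column. The housing-style columns are invented for the example.

```python
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical raw data.
df = pd.DataFrame({
    "city": ["Paris", "Lima", "Paris", "Oslo"],
    "rooms": [2, 3, 4, 1],
    "area_m2": [40.0, 75.0, 120.0, 25.0],
})

# New feature derived from existing ones.
df["area_per_room"] = df["area_m2"] / df["rooms"]

numeric_cols = ["rooms", "area_m2", "area_per_room"]
categorical_cols = ["city"]

transformer = ColumnTransformer(
    [
        # Scale numeric columns to zero mean and unit variance.
        ("num", StandardScaler(), numeric_cols),
        # Encode each city as its own 0/1 column; ignore unseen cities at predict time.
        ("cat", OneHotEncoder(handle_unknown="ignore"), categorical_cols),
    ],
    sparse_threshold=0.0,  # always return a dense NumPy array
)

features = transformer.fit_transform(df)
print(features.shape)  # 3 scaled numeric columns + 3 one-hot city columns
```

In a real project the transformer would be fitted on the training split only and then applied to the test split, so that no information leaks from the test data.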

4. Model Selection and Training

After preparing the data, it’s time to select an ML model that best suits the problem at hand. There are numerous ML algorithms available, ranging from decision trees and random forests to support vector machines and neural networks. The choice of model depends on the nature of the problem, the type of data, and the desired performance.

Once the model is selected, it needs to be trained on the prepared dataset. Training involves feeding the data into the model so that it can learn patterns and adjust its internal parameters. For many models, an iterative optimization technique such as gradient descent updates the parameters step by step to minimize a loss function that measures prediction error.
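To make the idea of gradient descent concrete, here is a minimal NumPy sketch that fits a one-variable linear model by minimizing mean squared error. The synthetic data, the learning rate, and the number of steps are assumptions chosen so the example converges quickly.

```python
import numpy as np

# Synthetic data generated from y = 3x + 2 plus a little noise.
rng = np.random.default_rng(42)
x = rng.uniform(-1, 1, size=100)
y = 3.0 * x + 2.0 + rng.normal(0, 0.1, size=100)

# Model parameters, starting from zero.
w, b = 0.0, 0.0
learning_rate = 0.1

for step in range(500):
    error = (w * x + b) - y
    # Gradients of the mean squared error with respect to w and b.
    grad_w = 2 * np.mean(error * x)
    grad_b = 2 * np.mean(error)
    # Move each parameter a small step against its gradient.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

final_mse = np.mean((w * x + b - y) ** 2)
print(f"w={w:.2f}, b={b:.2f}, mse={final_mse:.4f}")
```

Libraries such as scikit-learn hide this loop behind `fit`, and not every algorithm uses it (decision trees, for example, are built differently), but the loop shows what "adjusting internal parameters" means in practice.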

5. Model Evaluation

Evaluation assesses how well an ML model performs on data it has not seen. This requires splitting the dataset into training and testing sets before training: the model is fitted on the training set only, and the held-out test set is used to estimate how well it generalizes.

Various evaluation metrics exist, depending on the type of problem being tackled. For classification tasks, metrics such as accuracy, precision, recall, and F1-score are commonly used. In regression tasks, metrics like mean squared error (MSE) and R-squared are employed.
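The following sketch computes these metrics with scikit-learn. The classification data is synthetic and the logistic regression model is just a stand-in; the regression numbers come from three hand-written values so they are easy to check by hand.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import (accuracy_score, f1_score, mean_squared_error,
                             precision_score, r2_score, recall_score)
from sklearn.model_selection import train_test_split

# Synthetic binary classification problem (an assumption for the example).
X, y = make_classification(n_samples=500, n_features=10, n_informative=5,
                           n_redundant=2, n_clusters_per_class=1,
                           class_sep=2.0, random_state=0)

# Hold out 20% of the data; the model never sees it during training.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0)

model = LogisticRegression(max_iter=1000).fit(X_train, y_train)
y_pred = model.predict(X_test)

accuracy = accuracy_score(y_test, y_pred)
precision = precision_score(y_test, y_pred)
recall = recall_score(y_test, y_pred)
f1 = f1_score(y_test, y_pred)
print(f"accuracy={accuracy:.3f} precision={precision:.3f} "
      f"recall={recall:.3f} f1={f1:.3f}")

# Regression metrics on a tiny hand-written example.
y_true_reg = [3.0, 5.0, 7.0]
y_pred_reg = [2.5, 5.0, 7.5]
mse = mean_squared_error(y_true_reg, y_pred_reg)
r2 = r2_score(y_true_reg, y_pred_reg)
print(f"mse={mse:.4f} r2={r2:.4f}")
```

Accuracy alone can mislead on imbalanced data, which is why precision, recall, and F1 are usually reported alongside it.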

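Cross-validation is another technique used for model evaluation. In k-fold cross-validation, the data is divided into k subsets (folds); the model is trained on k-1 folds and tested on the remaining one, and the process repeats until every fold has served as the test set once. Averaging the k scores gives a more robust estimate of model performance than a single split.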
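A minimal sketch of cross-validation using scikit-learn’s `cross_val_score`. The synthetic dataset, the choice of logistic regression, and the five folds are assumptions for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score

# Synthetic binary classification data.
X, y = make_classification(n_samples=300, n_features=8, random_state=0)

model = LogisticRegression(max_iter=1000)

# cv=5 runs five train/test rounds; for classifiers, scikit-learn uses
# stratified folds so each fold keeps roughly the same class balance.
scores = cross_val_score(model, X, y, cv=5, scoring="accuracy")

print("fold accuracies:", scores.round(3))
print(f"mean={scores.mean():.3f} std={scores.std():.3f}")
```

The spread of the fold scores is informative as well: a large standard deviation suggests the estimate depends heavily on which samples end up in the test fold.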

6. Hyperparameter Tuning

Hyperparameters are configuration values that are not learned from the data during training. They are set before training starts and can significantly impact the model’s performance. Examples of hyperparameters include learning rate, regularization strength, and the number of hidden layers in a neural network.

Hyperparameter tuning means searching for the combination of hyperparameters that yields the best validation performance. Grid search tries every combination from a predefined set of values, while random search samples combinations at random, which often scales better when there are many hyperparameters. Libraries like scikit-learn provide tools for both, such as `GridSearchCV` and `RandomizedSearchCV`.
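As an illustration, the sketch below runs a grid search over the regularization strength `C` of a logistic regression model using scikit-learn’s `GridSearchCV`. The candidate values and the synthetic data are assumptions for the example.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, train_test_split

X, y = make_classification(n_samples=400, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0)

# Candidate values for the inverse regularization strength C.
param_grid = {"C": [0.01, 0.1, 1.0, 10.0]}

# Each candidate is scored with 5-fold cross-validation on the training data.
search = GridSearchCV(LogisticRegression(max_iter=1000), param_grid,
                      cv=5, scoring="accuracy")
search.fit(X_train, y_train)

print("best params:", search.best_params_)
print(f"best cross-validated accuracy: {search.best_score_:.3f}")
# The untouched test set gives a final check of the tuned model.
print(f"test accuracy: {search.score(X_test, y_test):.3f}")
```

Tuning on cross-validation folds of the training data, and touching the test set only once at the end, keeps the final score an honest estimate.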

7. Model Deployment

The final step in the ML workflow is deploying the model into production so that it can be used to make predictions on new and unseen data. Deployment involves integrating the model into an application or service and ensuring it functions reliably and efficiently.

There are various ways to deploy an ML model, depending on the specific requirements. It could be as simple as using a REST API to expose the model’s predictions or embedding the model within a web application. Advanced deployment techniques include containerization with Docker or deploying on cloud platforms like Amazon Web Services (AWS) or Google Cloud Platform (GCP).
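As a rough sketch of the simplest option, the snippet below serializes a trained model and exposes it through a small HTTP endpoint built with Python’s standard library. The `/predict` path, the JSON layout, and the helper names `predict_from_json`, `PredictHandler`, and `serve` are all hypothetical; a production service would more likely use a web framework and add input validation, logging, and authentication.

```python
import json
import pickle
from http.server import BaseHTTPRequestHandler, HTTPServer

from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Train a stand-in model and serialize it, as a training job might do.
X, y = make_classification(n_samples=200, n_features=4, random_state=0)
model_bytes = pickle.dumps(LogisticRegression(max_iter=1000).fit(X, y))

# In the serving process, load the model once at startup.
# Only unpickle data from a trusted source.
MODEL = pickle.loads(model_bytes)


def predict_from_json(body: bytes) -> bytes:
    """Turn a request body like {"features": [[...], ...]} into predictions."""
    payload = json.loads(body)
    predictions = MODEL.predict(payload["features"]).tolist()
    return json.dumps({"predictions": predictions}).encode("utf-8")


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            response = predict_from_json(self.rfile.read(length))
        except (KeyError, ValueError):
            # Malformed JSON, missing "features", or wrongly shaped input.
            self.send_error(400, "invalid request body")
            return
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(response)))
        self.end_headers()
        self.wfile.write(response)


def serve(port: int = 8000) -> None:
    """Start the server; blocks until interrupted."""
    HTTPServer(("127.0.0.1", port), PredictHandler).serve_forever()
```

Calling `serve()` starts the endpoint locally. The same `predict_from_json` function could be wrapped by a web framework or packaged into a Docker image without changing the model code.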

Conclusion

An end-to-end ML workflow involves a series of steps, from data collection and preprocessing to model training, evaluation, and deployment. Each step requires careful attention and understanding to ensure accurate and reliable predictions.
