Anatomy of Convolutional Neural Networks

In recent years, Convolutional Neural Networks (CNNs) have revolutionized computer vision and image processing, achieving remarkable performance in areas such as image classification, object detection, and segmentation. This blog post walks through the anatomy of CNNs: the key components and architectural elements that make them such powerful and efficient models for visual recognition tasks.

Introduction to Convolutional Neural Networks

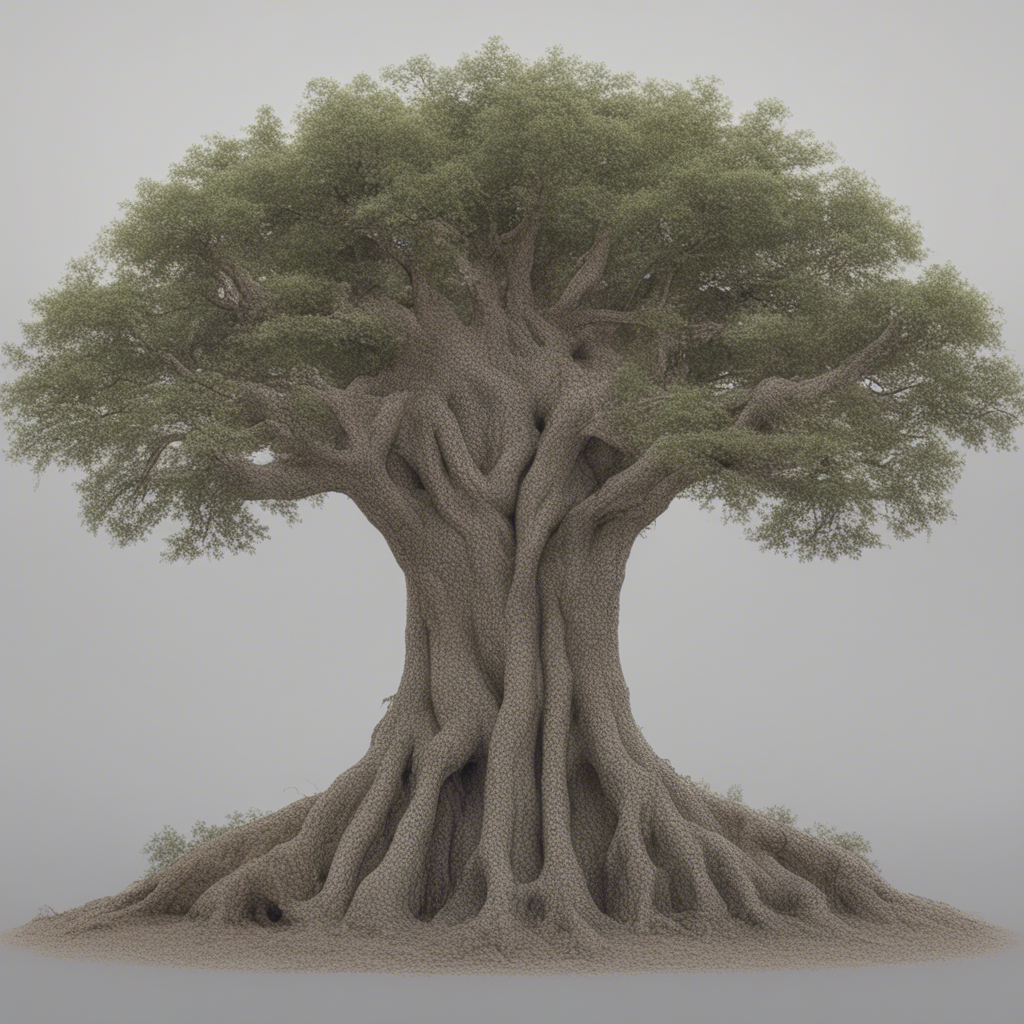

Convolutional Neural Networks (CNNs), also known as ConvNets, are a class of artificial neural networks specifically designed to process and analyze visual information. Inspired by the organization of the visual cortex in animals, CNNs excel at capturing spatial and hierarchical patterns in images. CNN architecture typically consists of multiple layers, each with a specific purpose in the feature extraction and classification process.

1. Convolutional Layer

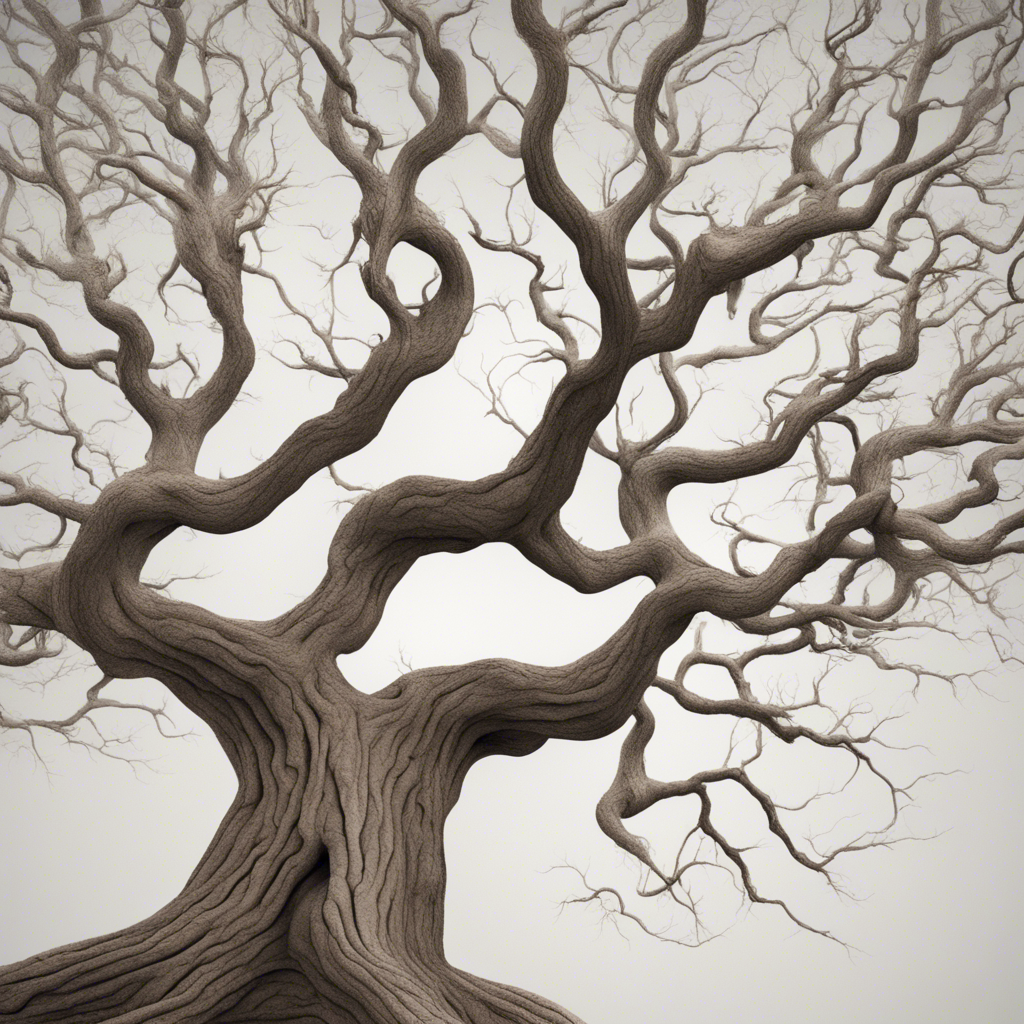

The convolutional layer is the core building block of a CNN. It performs the convolution operation, in which a set of learnable filters, also known as kernels, is applied to the input image or to the feature maps generated by previous layers. Each kernel slides across the input and, at every position, computes a weighted sum over a small local region called its receptive field. This allows CNNs to capture important visual features such as edges, textures, and shapes.
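To make the sliding-window idea concrete, here is a minimal sketch in plain Python. It assumes a single-channel input, stride 1, and no padding, and the function name `conv2d_valid` and the hand-picked kernel are illustrative choices rather than anything a particular library provides. Like most deep learning frameworks, it does not flip the kernel, so strictly speaking it computes a cross-correlation.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (lists of lists), stride 1, no padding."""
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            # Weighted sum over the receptive field whose top-left corner is (r, c).
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


# A 4x4 image with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked vertical-edge kernel; in a trained CNN these weights are learned.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d_valid(image, kernel)
for row in feature_map:
    print(row)  # each row is [0.0, 2.0, 0.0]
```

The response is non-zero only in the middle column, exactly where the window straddles the edge, which is the sense in which a kernel "detects" a feature.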

Each filter produces one two-dimensional output, referred to as a feature map or activation map. The feature maps from all filters are stacked, so the depth of the layer's output equals the number of filters used in that layer. Successive convolutional layers learn progressively more complex and abstract features, enabling the network to recognize higher-level visual patterns.
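The relationship between filter count and output depth can be sketched with a small shape calculator. This assumes square kernels and the usual output-size formula floor((n + 2p - k) / s) + 1; the helper name `conv_output_shape` and the layer sizes are hypothetical, chosen only for illustration.

```python
def conv_output_shape(height, width, kernel_size, num_filters, stride=1, padding=0):
    """Return (height, width, depth) of a convolutional layer's output."""
    out_h = (height + 2 * padding - kernel_size) // stride + 1
    out_w = (width + 2 * padding - kernel_size) // stride + 1
    # One feature map per filter, so the depth is simply the filter count.
    return (out_h, out_w, num_filters)


# Hypothetical two-layer stack applied to a 32x32 input.
shape1 = conv_output_shape(32, 32, kernel_size=3, num_filters=16, padding=1)
shape2 = conv_output_shape(shape1[0], shape1[1], kernel_size=5, num_filters=32)

print(shape1)  # (32, 32, 16)
print(shape2)  # (28, 28, 32)
```

Note that the depth of the input does not appear in the result: each filter spans the full input depth and still yields a single map.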

2. Pooling Layer

Pooling layers are usually inserted after convolutional layers to reduce spatial dimensions while retaining the essential features. The most commonly used pooling operation is max pooling, which slides a window over the feature maps (typically in non-overlapping steps) and keeps only the maximum value within each region. This downsampling reduces the network's sensitivity to small spatial variations and lowers the computational cost of subsequent layers.

Other pooling operations, such as average pooling or L2 pooling, can be used depending on the task. Pooling layers give CNNs a degree of translation invariance, making them robust to small shifts in the input data.
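As a rough illustration of max pooling, the sketch below uses a square window whose stride equals its size, so the regions do not overlap. The function name `max_pool2d` and the sample values are made up for the example.

```python
def max_pool2d(feature_map, size=2):
    """Max pooling with a size x size window and stride equal to size."""
    h, w = len(feature_map), len(feature_map[0])
    pooled = []
    for r in range(0, h - size + 1, size):
        row = []
        for c in range(0, w - size + 1, size):
            # Collect the values in the current window and keep the largest.
            window = [feature_map[r + i][c + j]
                      for i in range(size) for j in range(size)]
            row.append(max(window))
        pooled.append(row)
    return pooled


feature_map = [
    [1, 3, 2, 0],
    [4, 2, 1, 1],
    [0, 1, 5, 6],
    [2, 2, 7, 8],
]

pooled = max_pool2d(feature_map)
print(pooled)  # [[4, 2], [2, 8]]
```

A 4x4 map becomes 2x2, and moving the value 4 anywhere within its 2x2 window leaves the output unchanged, which is where the tolerance to small shifts comes from.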

3. Activation Function

Activation functions introduce non-linearities to the CNN, enabling it to model complex relationships between inputs and outputs. Without them, a stack of convolutional and fully connected layers would collapse into a single linear transformation, no matter how many layers it had. Common activation functions used in CNNs include the Rectified Linear Unit (ReLU), sigmoid, and hyperbolic tangent (tanh). ReLU is widely preferred due to its simplicity and computational efficiency.

The ReLU activation function computes max(0, x): negative inputs become zero and positive inputs pass through unchanged. Because its gradient is 1 for every positive input, ReLU does not saturate the way sigmoid and tanh do, which mitigates the vanishing gradient problem and tends to accelerate convergence during training. The choice of an appropriate activation function depends on the specific task and the behavior desired in the network’s output.
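The saturation argument is easy to check numerically. The following sketch defines the three activations and their derivatives for scalar inputs using only the standard library; the helper names are illustrative, and the input value 5.0 is an arbitrary "large" pre-activation.

```python
import math


def relu(x):
    return max(0.0, x)


def relu_grad(x):
    # Derivative is 1 for positive inputs and 0 otherwise.
    return 1.0 if x > 0 else 0.0


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)


def tanh_grad(x):
    return 1.0 - math.tanh(x) ** 2


x = 5.0
print("relu grad:   ", relu_grad(x))     # stays at 1.0
print("sigmoid grad:", sigmoid_grad(x))  # below 0.01
print("tanh grad:   ", tanh_grad(x))     # below 0.001
```

When many such small derivatives are multiplied together during backpropagation, the gradient reaching early layers shrinks quickly for sigmoid and tanh, whereas ReLU passes it through unchanged for active units.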

4. Fully Connected Layers

Fully connected layers, also known as dense layers, are typically placed at the end of a CNN architecture, after the final feature maps have been flattened into a vector. These layers serve as classifiers, transforming the high-level features extracted by previous layers into class probabilities or regression values. Each neuron in a fully connected layer is connected to every neuron in the previous layer, enabling global information processing.

The number of neurons in the final fully connected layer corresponds to the number of classes in a classification task or to the desired output dimension in a regression task. For classification, a softmax activation is commonly used to turn the layer's raw scores into a probability distribution over classes, while a linear activation is suitable for regression.
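A minimal sketch of a classification head might look like the following. It assumes the features have already been flattened into a list, and the weights, biases, and feature values are arbitrary numbers chosen for illustration, not trained parameters.

```python
import math


def dense(inputs, weights, biases):
    """Fully connected layer: every output neuron sees every input value."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical flattened features and a 3-class output layer.
features = [0.5, -1.0, 2.0]
weights = [
    [0.2, 0.1, 0.4],   # weights for class 0
    [-0.3, 0.5, 0.1],  # weights for class 1
    [0.0, -0.2, 0.3],  # weights for class 2
]
biases = [0.0, 0.1, -0.1]

logits = dense(features, weights, biases)
probs = softmax(logits)
predicted_class = probs.index(max(probs))
print(probs, predicted_class)
```

The weight matrix has one row per class, which is why the size of the final layer is tied to the number of classes.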

5. Dropout Regularization

Overfitting, the phenomenon where the model becomes too specialized to the training data and fails to generalize to new examples, is a common challenge in deep learning. Dropout is a technique used to mitigate overfitting in CNNs. It randomly sets a fraction of a layer's units to zero during each training iteration, which discourages the co-adaptation of neurons. At inference time, dropout is turned off and all units are kept.

Dropout encourages the network to learn more robust and generalizable features by preventing it from relying too heavily on specific activations. The dropout rate, indicating the fraction of units to be dropped, is a hyperparameter that needs to be tuned to achieve the desired regularization effect.
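The mechanism can be sketched in a few lines. This version assumes the common "inverted dropout" variant, in which the surviving activations are scaled by 1 / (1 - rate) during training so that no rescaling is needed at inference; the function name and parameters are illustrative.

```python
import random


def dropout(activations, rate, training=True, rng=random):
    """Inverted dropout over a list of activations."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        # At inference time every unit is kept and nothing is rescaled.
        return list(activations)
    keep_prob = 1.0 - rate
    # Keep each unit with probability keep_prob, scaling survivors so the
    # expected value of each activation is unchanged.
    return [a / keep_prob if rng.random() < keep_prob else 0.0
            for a in activations]


rng = random.Random(0)  # seeded only to make the example repeatable
train_out = dropout([1.0] * 1000, rate=0.5, rng=rng)
eval_out = dropout([1.0] * 1000, rate=0.5, training=False)

print(sum(1 for v in train_out if v == 0.0), "of 1000 units dropped")
print(eval_out[:3])  # [1.0, 1.0, 1.0]
```

Because a different random subset is dropped at every iteration, no unit can rely on a specific neighbor always being present, which is the intuition behind the regularization effect.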

Conclusion

Convolutional Neural Networks have proven to be a groundbreaking technology in computer vision, enabling significant advancements in tasks such as image recognition, object detection, and semantic segmentation. Understanding the anatomy of CNNs, from the convolutional and pooling layers for feature extraction to the fully connected layers for classification, is essential in harnessing the power of these networks.

In this blog post, we explored the key components that constitute CNNs, their functions, and the rationale behind their design. Convolutional layers, pooling layers, activation functions, fully connected layers, and regularization techniques like dropout together form the foundation on which computer vision research and applications continue to build.
