An Intro to Graph Neural Networks and Applications

Graph Neural Networks (GNNs) have emerged as powerful tools for analyzing and modeling complex network data. With the ability to effectively represent and reason about relationships and dependencies in graphs, GNNs offer new opportunities across various domains such as social networks, recommendation systems, cybersecurity, and biology. In this blog post, we will provide an introduction to GNNs, discuss their key components, and explore their applications in different fields.

Introduction to Graph Neural Networks

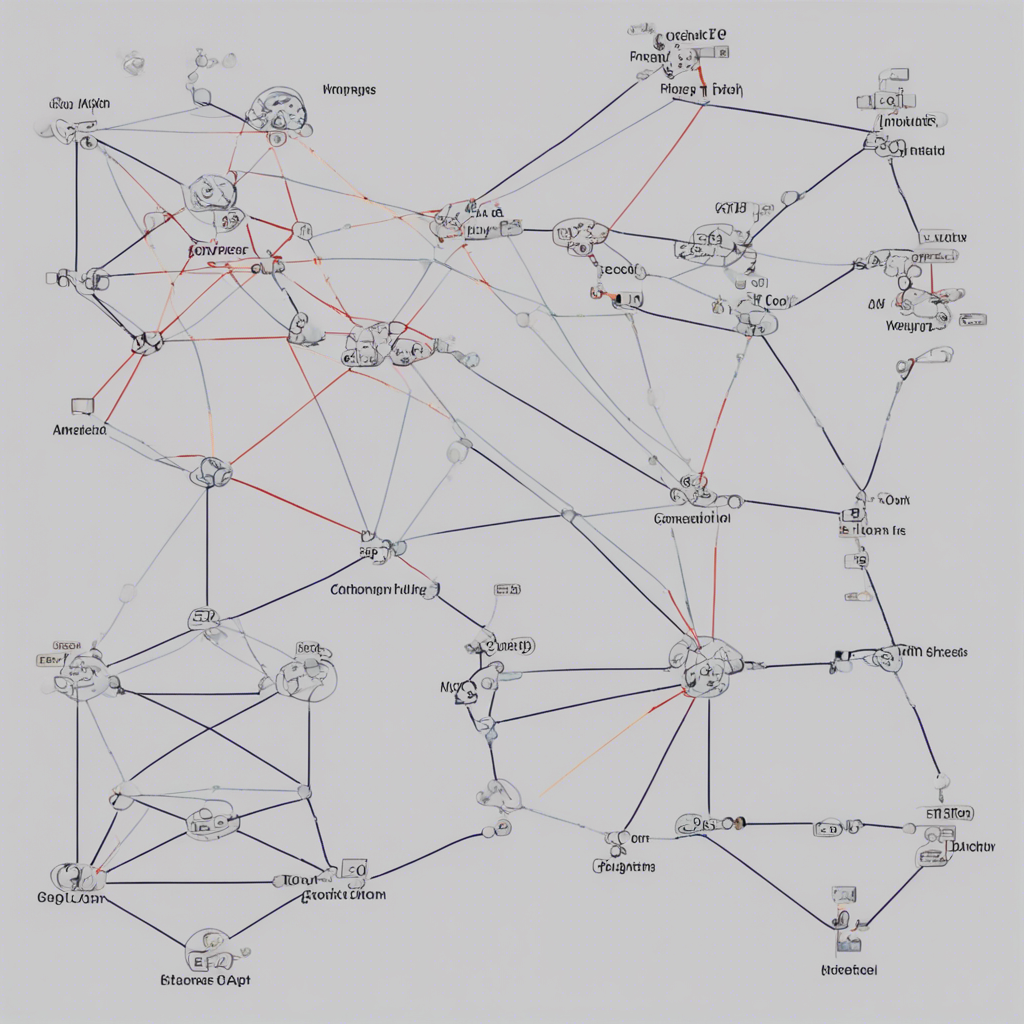

A graph can be defined as a collection of nodes (also known as vertices) connected by edges (links). It can represent a wide range of real-world phenomena, including social networks, transportation systems, and molecular structures. Traditional neural networks are not well-suited for processing graph data, as they typically expect fixed-size, regularly ordered inputs (such as grids or sequences) and treat inputs as independent of each other. GNNs address this limitation by incorporating graph structure and neighboring information to make more informed predictions.
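As a minimal sketch of the definition above, a small undirected graph can be stored as an adjacency list plus one feature vector per node. The edge list, node count, and feature values here are made up for illustration, and `build_adjacency` is a hypothetical helper rather than part of any library.

```python
# Hypothetical toy graph: 4 nodes, undirected edges given as (u, v) pairs.
edges = [(0, 1), (0, 2), (1, 2), (2, 3)]
num_nodes = 4


def build_adjacency(num_nodes, edges):
    """Return an adjacency list: node index -> sorted list of neighbor indices."""
    neighbors = {node: set() for node in range(num_nodes)}
    for u, v in edges:
        neighbors[u].add(v)
        neighbors[v].add(u)  # undirected: store the edge in both directions
    return {node: sorted(nbrs) for node, nbrs in neighbors.items()}


adjacency = build_adjacency(num_nodes, edges)

# One feature vector per node (illustrative values only).
features = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0], 3: [0.5, 0.5]}

for node, nbrs in adjacency.items():
    print(node, "neighbors:", nbrs, "features:", features[node])
```

The number of neighbors differs from node to node, which is one reason fixed-size input layers do not apply directly to graph data.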

Representation Learning in GNNs

The core idea behind GNNs is to learn useful representations of nodes in a graph. By aggregating and transforming information from neighboring nodes, GNNs can capture higher-order dependencies and produce node embeddings that encode both structural and attribute information.

At each layer of a GNN, nodes update their representations by aggregating information from their neighbors. This aggregation is typically performed with a permutation-invariant function, such as the sum, mean, or max operation, so the result does not depend on the order in which neighbors are visited. After aggregation, a node applies a transformation function to incorporate its own features and update its representation. The updated node embeddings are then used as input for subsequent layers, allowing the model to capture increasingly complex patterns.
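The aggregate-then-transform step described above can be sketched in plain Python. This is an illustrative layer under stated assumptions, not a reference implementation: it uses mean aggregation, separate weight matrices `w_self` and `w_neigh` for the node's own features and the aggregated neighbor features, and a ReLU nonlinearity. All names and values are hypothetical, and a real model would learn the weights and use a tensor library.

```python
def mean_aggregate(node, adjacency, features):
    """Average the feature vectors of a node's neighbors."""
    nbrs = adjacency[node]
    dim = len(features[node])
    if not nbrs:
        return [0.0] * dim  # isolated node: nothing to aggregate
    return [sum(features[n][d] for n in nbrs) / len(nbrs) for d in range(dim)]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def gnn_layer(adjacency, features, w_self, w_neigh):
    """One layer: aggregate neighbors, combine with own features, apply ReLU."""
    updated = {}
    for node in adjacency:
        aggregated = mean_aggregate(node, adjacency, features)
        own_part = matvec(w_self, features[node])
        neigh_part = matvec(w_neigh, aggregated)
        updated[node] = [max(0.0, a + b) for a, b in zip(own_part, neigh_part)]
    return updated


# Toy star graph: node 0 is connected to nodes 1 and 2.
adjacency = {0: [1, 2], 1: [0], 2: [0]}
features = {0: [1.0, 0.0], 1: [0.0, 2.0], 2: [2.0, 0.0]}
identity = [[1.0, 0.0], [0.0, 1.0]]  # stand-in for learned weights

hidden = gnn_layer(adjacency, features, identity, identity)
print(hidden)
```

Calling `gnn_layer` again on `hidden` corresponds to stacking a second layer, which lets each node see information from two hops away.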

Message Passing in GNNs

The message passing mechanism is at the heart of GNNs and facilitates information propagation across the graph. During message passing, each node exchanges information with its neighbors and updates its own representation based on the received messages. Each round moves information one hop, so after k rounds a node's representation reflects its k-hop neighborhood. This is how GNNs capture dependencies that extend beyond a node's immediate neighbors.

The message passing process can be formulated using mathematical equations. Given an initial set of node representations, the message passing equations are applied iteratively, either for a fixed number of rounds (one per layer, as in most modern GNNs) or until the representations converge (as in some early recurrent formulations). This iterative process enables nodes to gather and update information from their immediate neighborhood and influence their neighbors through successive iterations.
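One common way to write a single round of message passing is shown below. The notation is an assumption introduced here for illustration: $h_v^{(k)}$ is the representation of node $v$ after round $k$, $\mathcal{N}(v)$ is the set of neighbors of $v$, and AGGREGATE and UPDATE stand for whatever functions a particular architecture chooses.

```latex
\begin{aligned}
m_v^{(k)} &= \mathrm{AGGREGATE}\left(\left\{ h_u^{(k-1)} : u \in \mathcal{N}(v) \right\}\right) \\
h_v^{(k)} &= \mathrm{UPDATE}\left( h_v^{(k-1)},\; m_v^{(k)} \right)
\end{aligned}
```

Here $h_v^{(0)}$ is the node's input feature vector, and the variants discussed in this post differ mainly in how they define the two functions.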

Types of Graph Neural Networks

Several variants of GNNs have been proposed, each with its own architectural design and use cases. Let’s take a look at some of the most popular types of GNNs:

Graph Convolutional Networks (GCNs)

Graph Convolutional Networks (GCNs), introduced by Kipf and Welling in 2016, were one of the first successful GNN architectures. GCNs extend the traditional convolutional neural network (CNN) concept to graphs by defining a convolution operation based on the graph’s normalized adjacency matrix with self-loops added. This operation aggregates information from a node and its immediate neighbors and updates the node's representation.
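A single GCN layer can be sketched as follows, assuming the symmetric normalization from Kipf and Welling's paper: self-loops are added, and each neighbor's contribution is scaled by one over the square root of the product of the two nodes' degrees. The graph, features, and weight matrix are hypothetical values chosen to keep the arithmetic easy to follow, and ReLU is assumed as the nonlinearity.

```python
import math


def gcn_layer(adjacency, features, weight):
    """One GCN layer: normalized neighborhood sum, linear map, then ReLU.

    `weight` is a list of rows with shape (input_dim, output_dim).
    """
    # Add self-loops so each node also keeps its own features.
    with_self = {v: set(nbrs) | {v} for v, nbrs in adjacency.items()}
    degree = {v: len(nbrs) for v, nbrs in with_self.items()}
    in_dim, out_dim = len(weight), len(weight[0])

    updated = {}
    for v, nbrs in with_self.items():
        aggregated = [0.0] * in_dim
        for u in nbrs:
            coeff = 1.0 / math.sqrt(degree[v] * degree[u])  # symmetric normalization
            for d in range(in_dim):
                aggregated[d] += coeff * features[u][d]
        projected = [
            sum(aggregated[d] * weight[d][j] for d in range(in_dim))
            for j in range(out_dim)
        ]
        updated[v] = [max(0.0, x) for x in projected]
    return updated


# Toy graph: nodes 0 and 1 are connected, node 2 is isolated.
adjacency = {0: [1], 1: [0], 2: []}
features = {0: [1.0], 1: [3.0], 2: [5.0]}
weight = [[1.0]]  # stand-in for a learned 1x1 weight matrix

output = gcn_layer(adjacency, features, weight)
print(output)
```

With these values, nodes 0 and 1 both end up at the average of their two features, while the isolated node keeps its own feature because its only "neighbor" is its self-loop.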

GCNs have been widely adopted due to their simplicity and effectiveness. They have been successfully applied to tasks such as node classification, link prediction, and graph classification.

GraphSAGE

GraphSAGE (Graph SAmple and aggreGatE) is a variant of GNNs designed to scale to larger graphs. Instead of aggregating over every neighbor of every node, GraphSAGE randomly samples a fixed-size neighborhood for each node. This sampling strategy bounds the cost of each update regardless of node degree while still allowing nodes to learn from a diverse set of neighbors.
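The fixed-size sampling idea can be sketched as below. This is an illustrative version, not the authors' code: `sample_neighbors` is a hypothetical helper, and the choice to sample with replacement when a node has fewer neighbors than the requested sample size is an assumption made here so that every node yields the same number of samples.

```python
import random


def sample_neighbors(adjacency, node, num_samples, rng):
    """Return exactly `num_samples` neighbors of `node` (empty list if it has none)."""
    nbrs = adjacency[node]
    if not nbrs:
        return []
    if len(nbrs) >= num_samples:
        return rng.sample(nbrs, num_samples)  # enough neighbors: no repeats
    # Too few neighbors: sample with replacement to reach the fixed size.
    return [rng.choice(nbrs) for _ in range(num_samples)]


# Toy star graph: node 0 is a hub with four neighbors.
adjacency = {0: [1, 2, 3, 4], 1: [0], 2: [0], 3: [0], 4: [0]}
rng = random.Random(0)  # seeded only to make the sketch repeatable

sampled_hub = sample_neighbors(adjacency, 0, 2, rng)
sampled_leaf = sample_neighbors(adjacency, 1, 2, rng)
print("hub sample:", sampled_hub)
print("leaf sample:", sampled_leaf)
```

The sampled lists would then be passed to an aggregation function such as a mean, so the cost per node depends on `num_samples` rather than on the node's true degree.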

GraphSAGE has demonstrated excellent performance on large-scale graph datasets and has been used for tasks such as recommendation systems and large-scale social network analysis.

Graph Attention Networks (GATs)

Graph Attention Networks (GATs) introduced an attention mechanism into GNNs. Attention allows nodes to dynamically weigh the importance of their neighbors’ messages during message passing. This enables GATs to focus on the most relevant information while effectively aggregating information from different parts of the graph.
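The weighting step can be sketched as follows. This is a simplified, single-head version under stated assumptions: node features are taken as already linearly transformed, the score for a pair is a LeakyReLU applied to the dot product of an attention vector with the concatenated pair of feature vectors, and the node attends to itself as well as its neighbors. The attention vector and features are hypothetical values, whereas a trained model would learn them.

```python
import math


def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x


def attention_weights(node, adjacency, features, attn_vector):
    """Softmax-normalized attention weights over a node and its neighbors."""
    candidates = [node] + list(adjacency[node])
    scores = []
    for u in candidates:
        pair = features[node] + features[u]  # concatenate the two feature vectors
        scores.append(leaky_relu(sum(a * x for a, x in zip(attn_vector, pair))))
    max_score = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - max_score) for s in scores]
    total = sum(exps)
    return {u: e / total for u, e in zip(candidates, exps)}


def attend(node, adjacency, features, attn_vector):
    """Attention-weighted sum of the node's own and its neighbors' features."""
    weights = attention_weights(node, adjacency, features, attn_vector)
    dim = len(features[node])
    return [sum(weights[u] * features[u][d] for u in weights) for d in range(dim)]


adjacency = {0: [1, 2], 1: [0], 2: [0]}
features = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0]}
attn_vector = [0.0, 0.0, 1.0, 1.0]  # illustrative: scores depend only on the neighbor

weights = attention_weights(0, adjacency, features, attn_vector)
print(weights)
print(attend(0, adjacency, features, attn_vector))
```

In this toy setup node 2 receives the largest weight because its features produce the highest score, which is the sense in which attention lets a node emphasize some neighbors over others.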

GATs reported state-of-the-art results on several node classification benchmarks when they were introduced, and they have since been applied to other graph-related tasks, including link prediction and social influence prediction.

Applications of Graph Neural Networks

Graph Neural Networks have found wide-ranging applications across numerous domains. Here are some notable examples:

Social Network Analysis

Social networks represent relationships between individuals, and GNNs excel at understanding social dynamics and predicting user behavior. GNNs have been used in identifying influential individuals, predicting user preferences, and recommending connections in social networks.

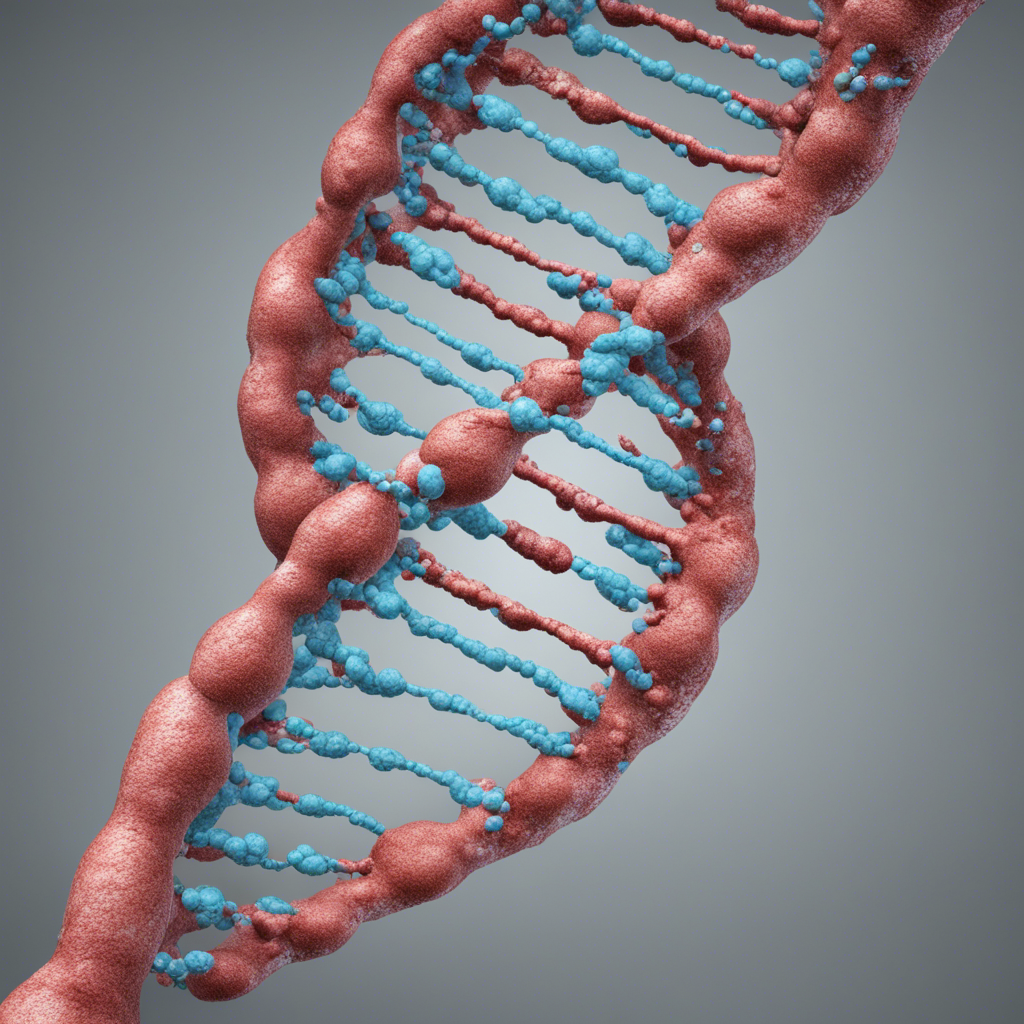

Drug Discovery

The analysis of molecular graphs is crucial in drug discovery. GNNs have shown promise in predicting chemical properties, virtual screening of potential drugs, and identifying molecular structures with desired properties. By leveraging the graph structure underlying chemical compounds, GNNs facilitate more efficient drug discovery processes.

Recommender Systems

Recommender systems are widely used to personalize user experiences by suggesting relevant items. GNNs can model users, items, and their relationships as a graph, enabling personalized recommendations based on similarity and connectivity in the graph. GNN-based recommender systems have demonstrated improved accuracy and flexibility compared to traditional methods.
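As a rough sketch of how connectivity can drive recommendations, the example below treats users and items as the two sides of a bipartite graph. A user's embedding is the mean of the embeddings of items they interacted with (a single aggregation step), and unseen items are ranked by dot product with that embedding. The user names, items, embedding values, and function names are all hypothetical, and a real system would learn the embeddings rather than fix them by hand.

```python
# Hypothetical user-item interaction graph and hand-picked item embeddings.
interactions = {"alice": ["book", "lamp"], "bob": ["lamp", "desk"]}
item_embeddings = {
    "book": [1.0, 0.0],
    "lamp": [0.5, 0.5],
    "desk": [0.0, 1.0],
    "chair": [0.1, 0.9],
}


def user_embedding(user):
    """Mean of the embeddings of the items the user is connected to."""
    items = interactions[user]
    dim = len(item_embeddings[items[0]])
    return [sum(item_embeddings[i][d] for i in items) / len(items) for d in range(dim)]


def recommend(user, top_k=1):
    """Rank items the user has not interacted with by dot-product score."""
    embedding = user_embedding(user)
    seen = set(interactions[user])
    scores = {
        item: sum(a * b for a, b in zip(embedding, vector))
        for item, vector in item_embeddings.items()
        if item not in seen
    }
    return sorted(scores, key=scores.get, reverse=True)[:top_k]


print(recommend("alice"))
print(recommend("bob"))
```

A GNN-based recommender would typically stack several such aggregation steps and train the embeddings on observed interactions, but the scoring idea stays the same.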

Cybersecurity

GNNs can help identify malicious activities and detect anomalous patterns in network traffic data. By leveraging graph representations of network traffic, GNNs can identify patterns of attacks and predict potential vulnerabilities to enhance network security.

Conclusion

Graph Neural Networks have revolutionized the field of graph analysis and modeling. By effectively incorporating graph structure and neighboring information, GNNs enable powerful learning and reasoning capabilities on complex network data. From social network analysis to drug discovery, GNNs have proven their versatility and potential across various domains. As research continues to advance, we can expect GNNs to make significant contributions to solving complex real-world problems.

Note: This blog post is an introduction to Graph Neural Networks. For a deeper understanding and more technical details, we recommend referring to the referenced papers and resources in the “References” section.

References

- Kipf, T.N., & Welling, M. (2017). Semi-supervised classification with graph convolutional networks. arXiv preprint arXiv:1609.02907.

- Hamilton, W., Ying, R., & Leskovec, J. (2017). Inductive representation learning on large graphs. Advances in Neural Information Processing Systems, 30, 1025-1035.

- Veličković, P., Cucurull, G., Casanova, A., Romero, A., Liò, P., & Bengio, Y. (2018). Graph Attention Networks. International Conference on Learning Representations, 2018.

- Wu, Z., Pan, S., Chen, F., Long, G., Zhang, C., & Yu, P.S. (2020). A comprehensive survey on graph neural networks. arXiv preprint arXiv:1901.00596.

Image source: Unsplash