How Decision Trees Make Complex Decisions Simple

Decision-making has become increasingly complex. From business strategies to medical diagnoses, individuals and organizations face many options and variables, which makes choosing the best course of action difficult. Decision trees simplify this process by breaking one large decision into a sequence of smaller, explicit questions. In this blog post, we will explore the concept of decision trees, their applications, and how they make complex decisions simple.

Understanding Decision Trees

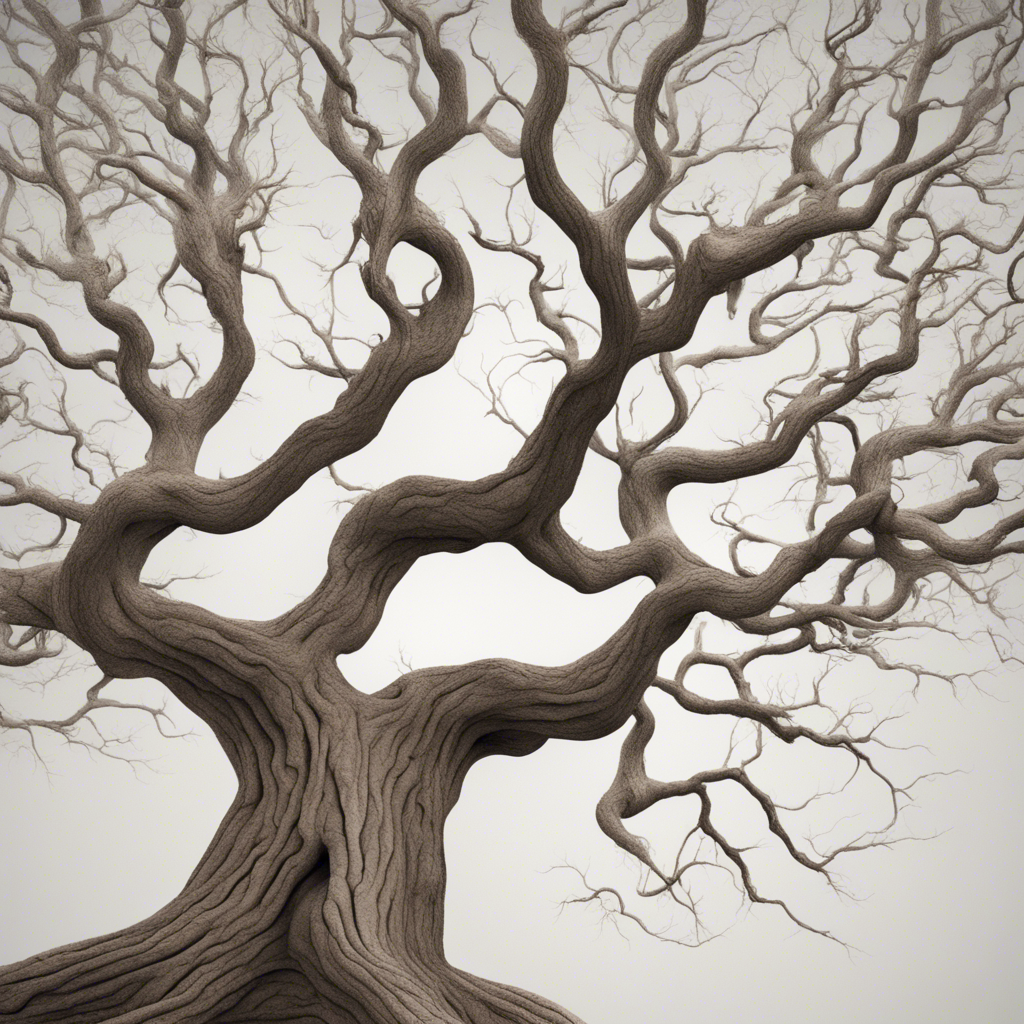

A decision tree is a graphical representation of a decision-making model. It consists of nodes, branches, and leaves: each internal node represents a decision or a test on some variable, each branch represents one possible answer or outcome of that test, and each leaf represents a final decision or outcome. Decision trees are commonly used in fields such as data analysis, machine learning, and operations research.

Components of a Decision Tree

To further grasp the concept of decision trees, let’s break down their key components:

- Root Node: Represents the initial decision or starting point.

- Internal Nodes: Represent subsequent decisions or choices.

- Branches: Connect nodes and indicate possible outcomes for each decision.

- Leaves: Terminal nodes that represent final decisions or outcomes.
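
As a rough illustration of how these components fit together, here is a minimal sketch in Python. The `Node` class, the `decide` function, and the weather questions are all hypothetical names made up for this example; they are not part of any particular library.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Node:
    """One node of a decision tree (hypothetical structure for illustration)."""
    question: Optional[str] = None   # set on the root and internal nodes
    outcome: Optional[str] = None    # set on leaves only
    branches: Dict[str, "Node"] = field(default_factory=dict)  # answer -> child node

    def is_leaf(self) -> bool:
        # A leaf has no outgoing branches.
        return not self.branches


def decide(node: Node, answers: Dict[str, str]) -> str:
    """Start at the root and follow one branch per question until a leaf is reached."""
    while not node.is_leaf():
        node = node.branches[answers[node.question]]
    return node.outcome


# Root node -> one internal node -> three leaves (made-up example data).
tree = Node(
    question="Is it raining?",
    branches={
        "yes": Node(outcome="Take an umbrella"),
        "no": Node(
            question="Is it windy?",
            branches={
                "yes": Node(outcome="Wear a jacket"),
                "no": Node(outcome="Go as you are"),
            },
        ),
    },
)

print(decide(tree, {"Is it raining?": "no", "Is it windy?": "yes"}))
```

Each call to `decide` visits only the nodes on a single path from the root to a leaf, which is why following a tree by hand is quick even when the tree itself is large.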

Decision Tree Algorithm

To construct a decision tree, we employ a decision tree algorithm, which follows these steps:

- Select the best predictor variable: The algorithm picks the variable that best separates the possible outcomes. How well a variable separates them is commonly measured with a criterion such as Gini impurity or information gain.

- Split the data: The data is divided into subsets based on the value of the selected predictor variable.

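- Recurse: The algorithm repeats the above steps on each subset, creating branches and internal nodes until a stopping condition is met, such as reaching a maximum depth, having too few samples left in a subset, or reaching a subset in which every sample has the same outcome.

- Assign outcomes: Once the stopping condition is met, the algorithm assigns a final outcome to each leaf, typically the majority class in that subset for classification or the mean value for regression.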
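
The steps above can be sketched in a few lines of Python. This is a simplified illustration rather than a production algorithm: it assumes categorical variables, uses Gini impurity as the selection criterion (one common choice among several), and stops only when a subset is pure or no variables remain. The function names and the small weather dataset are invented for the example.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 0.0 for a pure group, larger for more mixed groups."""
    total = len(labels)
    return 1.0 - sum((n / total) ** 2 for n in Counter(labels).values())


def best_feature(rows, labels, features):
    """Step 1: pick the feature whose split has the lowest weighted impurity."""
    def weighted_impurity(feature):
        groups = {}
        for row, label in zip(rows, labels):
            groups.setdefault(row[feature], []).append(label)
        return sum(len(g) / len(labels) * gini(g) for g in groups.values())
    return min(features, key=weighted_impurity)


def build_tree(rows, labels, features):
    # Stopping condition: pure subset or no features left.
    # Step 4: the leaf gets the majority label of its subset.
    if len(set(labels)) == 1 or not features:
        return Counter(labels).most_common(1)[0][0]
    feature = best_feature(rows, labels, features)
    remaining = [f for f in features if f != feature]
    # Step 2: split the data by the value of the chosen feature.
    subsets = {}
    for row, label in zip(rows, labels):
        sub_rows, sub_labels = subsets.setdefault(row[feature], ([], []))
        sub_rows.append(row)
        sub_labels.append(label)
    # Step 3: recurse on each subset.
    return {feature: {value: build_tree(r, l, remaining)
                      for value, (r, l) in subsets.items()}}


def predict(tree, row):
    """Walk from the root to a leaf; nested dicts are nodes, strings are leaves."""
    while isinstance(tree, dict):
        feature = next(iter(tree))
        tree = tree[feature][row[feature]]
    return tree


# Made-up training data for illustration only.
rows = [
    {"outlook": "sunny", "windy": "no"},
    {"outlook": "sunny", "windy": "yes"},
    {"outlook": "sunny", "windy": "yes"},
    {"outlook": "rainy", "windy": "no"},
    {"outlook": "rainy", "windy": "yes"},
]
labels = ["play", "play", "play", "play", "stay home"]

tree = build_tree(rows, labels, ["windy", "outlook"])
print(tree)
print(predict(tree, {"outlook": "rainy", "windy": "yes"}))
```

On this data the sketch splits on `outlook` first, because that split leaves less impurity than splitting on `windy`, and only the rainy subset needs a second split. Real implementations add numeric thresholds, depth limits, and pruning on top of the same basic loop.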

Applications of Decision Trees

Decision trees are used in many domains, including the following.

Business and Marketing

Businesses commonly utilize decision trees to make strategic choices such as market segmentation, product pricing, and resource allocation. Decision trees provide clear and intuitive visualizations of the decision-making process, enabling companies to optimize their strategies and enhance profitability.

Medicine and Healthcare

In the medical field, decision trees are used to support disease diagnosis, treatment selection, and patient risk assessment. By combining patient characteristics, symptoms, and test results into an explicit sequence of checks, decision trees help healthcare professionals reach decisions more consistently and efficiently, which can contribute to better patient outcomes.

Finance and Investment

Decision trees play a crucial role in financial planning, especially when analyzing investment decisions and risk assessment. These models consider factors such as market conditions, economic indicators, and risk tolerance, allowing investors to make informed choices and minimize potential losses.

Customer Service and Support

Customer service departments often employ decision trees to guide support staff through a series of questions and actions to resolve customer queries efficiently. The systematic approach of decision trees helps ensure consistent and satisfactory customer experiences without requiring extensive training for support agents.

Advantages of Decision Trees

The use of decision trees offers several advantages over other decision-making approaches:

- Simplicity and Interpretability: Decision trees present complex decision-making processes in a visually intuitive and easy-to-understand manner, making them accessible to individuals with varying levels of expertise.

- Efficiency: Using a decision tree is fast, because reaching a decision only requires following a single path from the root to a leaf. Building a tree also scales reasonably well to large datasets, since each split divides the data into smaller subsets that are processed independently.

- Nonlinear Relationships: Decision trees can handle complex relationships between variables, including nonlinear interactions, making them appropriate for a wide range of decision problems.

- Robustness: Decision trees are relatively insensitive to outliers in the input variables, because splits depend on how values are ordered rather than on their magnitude. Some tree algorithms can also handle missing values directly, which helps when information is imperfect or incomplete.
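
To make the point about nonlinear relationships more concrete, here is a small Python sketch using made-up XOR-style data, where the outcome depends on the combination of two inputs rather than on either one alone. The tree is written by hand for illustration, and the function names are hypothetical.

```python
# XOR-style data: the label is "yes" only when exactly one input is 1.
points = [((0, 0), "no"), ((0, 1), "yes"), ((1, 0), "yes"), ((1, 1), "no")]


def tree_predict(x1, x2):
    """A hand-written two-level tree: split on x1 first, then on x2."""
    if x1 == 0:
        return "yes" if x2 == 1 else "no"
    return "no" if x2 == 1 else "yes"


def single_split_accuracy(feature_index):
    """Best accuracy any single split on one input can reach on this data."""
    best = 0
    # Try every way of labelling the two sides of the split.
    for label_if_zero in ("no", "yes"):
        for label_if_one in ("no", "yes"):
            correct = sum(
                (label_if_one if x[feature_index] == 1 else label_if_zero) == y
                for x, y in points
            )
            best = max(best, correct)
    return best / len(points)


tree_accuracy = sum(tree_predict(*x) == y for x, y in points) / len(points)

print("two-level tree:", tree_accuracy)
print("single split on first input:", single_split_accuracy(0))
print("single split on second input:", single_split_accuracy(1))
```

No single question about one input gets more than half of these cases right, while two nested questions classify all four. Stacking splits in this way is what lets a tree capture interactions between variables.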

Conclusion

Decision trees provide a structured framework for simplifying complex decision-making processes. Their intuitive graphical representation, coupled with the ability to handle large datasets and nonlinear relationships, makes them a valuable tool in numerous fields. By leveraging decision trees, organizations and individuals can make informed choices, optimize their strategies, and improve their overall decision-making capabilities.

By applying decision tree algorithms and adapting them to specific domains, practitioners can tailor decision trees to their unique requirements. As technology continues to advance, decision trees will likely become even more sophisticated and influential in helping us navigate complex decisions effectively and efficiently.